Conduct a 90-minute audit today: list three obstacles, assign one measurable KPI to each, schedule a 7-day micro-test and require documented evidence at test end; you must assign an owner and a deadline to each item.

During diagnosis capture five concrete elements: throughput (units/hour), defect rate (%), cycle time (minutes), customer approval rate (%) and resource hours/day; ensure these columns are included in a spreadsheet and use keyboard macros to cut manual entry time – our internal trial shows a 62% reduction in keystrokes. In physical faults such as water leaks measure liters/hour; a 0.5 L/h leak brought a 12% rise in utility spend. If a target looks impossible, split it into 10% progress milestones and measure the change after each milestone.

Design each micro-test with a falsifiable hypothesis, a control, and two independent metrics that relate directly to revenue or lead time; if results cannot confirm improvement within seven days, escalate to a larger test. Do not fight scope solo: surround experiments with three stakeholders and consult existing guides. If diagnosis is difficult, add an external reviewer and apply 5 Whys to probe deeply. True improvement is seen when both primary metric and cost-per-unit changed together; when clarity comes, document exact steps, timelines and templates, then replicate at scale.

Step 1 – Isolate the Root Cause

Log 12 entries per day for seven consecutive days: timestamp, actor, trigger, observable effect, severity (1–5), metric value, immediate action, and financial impact (USD); use a shared spreadsheet with those exact column headers and a cell for “currently unresolved” flagged True/False.

When a recurring failure appears, perform a five-why chain and a compact fishbone: provide five sequential “why” statements with evidence for each link and a probability score (low/medium/high); annotate whether the root cause is technical, process, vendor, or human and add a one-line mitigation that can be implemented within 72 hours because rapid containment prevents escalation.

If invoices or bills are currently delayed, capture invoice ID, due date, and payment workflow step where the delay occurred; map the delay to customer impact (minutes of downtime or $ lost) and prioritize items with negative financial exposure over $500 per event. Apply a strict reporting style: single-line summary, three supporting data points, owner, and deadline.

Teach the team the questioning sequence and run 15-minute drills twice weekly; use role plays during simulated incidents so analysts learn to relate symptoms to root causes quickly. Give compassionate feedback when a human error appears: focus on qualities that support correction (motivation, curiosity, kindness) and use short learning modules led by Annemarie or another coach to build those qualities.

If elimination seems impossible, document residual risk and design compensating controls with cost and effectiveness estimates (e.g., 30% recurrence reduction for $2,000 upfront and $150/month). Set clear aims: reduce recurrence by 80% within 90 days, state what metrics are going to change, and maintain an abundance of small signals so teams can really see trends and measure progress while overcoming organizational friction.

Map recent failures to identify recurring triggers

Collect the last 12 incidents and log Date, Trigger, Context, Steps taken, Outcome, Severity (1-5), Direct cost USD, Hours lost, Owner, Escalation level; scan system logs across the last 90 days to match events against that list and produce a priority table with frequency and total cost per trigger.

Mark a trigger as recurring when it meets any of these objective thresholds: appears >=3 times within 30 days, represents >=25% of total incidents, or causes >$1,000 cumulative loss; assign an owner who must close the remediation within 48 business hours or the related project will pause.
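The three thresholds above can be checked mechanically once incidents are in a table. The following is a minimal sketch, assuming each incident is a `(date, trigger, cost_usd)` tuple and that "within 30 days" means the trailing 30 days before the review date; the function name and the data shape are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta

# Thresholds taken from the text above.
MIN_COUNT_30D = 3      # appears >= 3 times within 30 days
MIN_SHARE = 0.25       # represents >= 25% of total incidents
MAX_LOSS_USD = 1000.0  # causes > $1,000 cumulative loss


def recurring_triggers(incidents, today):
    """Return {trigger: [reasons]} for triggers that meet any threshold.

    `incidents` is a list of (date, trigger, cost_usd) tuples (assumed shape).
    The 30-day window is the trailing 30 days ending at `today` (assumption).
    """
    window_start = today - timedelta(days=30)
    total = len(incidents)
    count_30d = defaultdict(int)
    count_all = defaultdict(int)
    cost = defaultdict(float)

    for day, trigger, cost_usd in incidents:
        count_all[trigger] += 1
        cost[trigger] += cost_usd
        if window_start <= day <= today:
            count_30d[trigger] += 1

    flagged = {}
    for trigger in count_all:
        reasons = []
        if count_30d[trigger] >= MIN_COUNT_30D:
            reasons.append("frequency")
        if total and count_all[trigger] / total >= MIN_SHARE:
            reasons.append("share")
        if cost[trigger] > MAX_LOSS_USD:
            reasons.append("cost")
        if reasons:
            flagged[trigger] = reasons
    return flagged


# Illustrative data only, not real incidents.
sample = [
    (date(2025, 1, 2), "payment timeout", 200.0),
    (date(2025, 1, 10), "payment timeout", 150.0),
    (date(2025, 1, 20), "payment timeout", 100.0),
    (date(2025, 1, 5), "dns failure", 1200.0),
    (date(2024, 11, 1), "disk full", 50.0),
]
flagged = recurring_triggers(sample, today=date(2025, 1, 25))
```

Returning the reasons alongside each trigger keeps the priority table auditable: the owner can see which threshold was crossed.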

Create a spreadsheet template with these columns: Date, Trigger, Root-candidate, Where it occurred (service/vendor), Number of users impacted, Severity, Cost USD, Hours lost, Immediate actions, Next actions, Owner, Status. Use automated scans to populate Date, Trigger and Hours lost, then validate manually to achieve full clarity.

Set communication rules: trigger an email to Owner plus two stakeholders when a recurring trigger is detected; include impact numbers, last three occurrences, and the first three recommended actions. Require a 15-minute pause and triage call when a trigger affects >5 users or causes >$500 single-incident loss.

Apply the template to real cases: Shane decided to pause a deployment after three payment failures; he emailed ops, created three tickets, and blocked the release until a patch passed a 24-hour smoke test; the team has reduced repeat incidents from 8 to 2 in 60 days using that cadence.

Use non-technical examples to test the process: a course instructor taught herself the new LMS; when her husband changed a shared calendar, students missed a session; implement an automated calendar scan, send an email alert to students within 60 minutes, then log the event as a vendor-sourced issue and check the external source.

Track three KPIs weekly: recurrence rate (% of incidents that match recurring triggers), MTTR in hours, and number of corrective actions closed within SLA; set a concrete goal to cut recurrence rate by 50% within 90 days. No single fix is guaranteed; combine process changes, ownership, and targeted training to move beyond symptoms and reduce repeat loss.
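The three weekly KPIs reduce to simple arithmetic over the incident log. Here is a rough sketch, assuming incidents are dicts with `trigger` and `hours_to_resolve` keys and corrective actions carry a `closed_within_sla` flag; these field names are hypothetical.

```python
def weekly_kpis(incidents, recurring, actions):
    """Compute the three KPIs named in the text for one week.

    incidents: list of dicts with 'trigger' and 'hours_to_resolve' (assumed keys)
    recurring: set of trigger names already flagged as recurring
    actions:   list of dicts with a boolean 'closed_within_sla' (assumed key)
    """
    n = len(incidents)
    matching = sum(1 for i in incidents if i["trigger"] in recurring)
    return {
        # % of incidents that match recurring triggers
        "recurrence_rate_pct": 100.0 * matching / n if n else 0.0,
        # mean time to resolve, in hours
        "mttr_hours": sum(i["hours_to_resolve"] for i in incidents) / n if n else 0.0,
        # number of corrective actions closed within SLA
        "closed_within_sla": sum(1 for a in actions if a["closed_within_sla"]),
    }


def recurrence_target(baseline_pct, cut=0.5):
    """Target recurrence rate after the 50% cut stated in the text."""
    return baseline_pct * (1 - cut)


# Illustrative data only.
week = [
    {"trigger": "payment timeout", "hours_to_resolve": 2},
    {"trigger": "payment timeout", "hours_to_resolve": 4},
    {"trigger": "dns failure", "hours_to_resolve": 6},
    {"trigger": "disk full", "hours_to_resolve": 8},
]
actions = [{"closed_within_sla": True}, {"closed_within_sla": False}, {"closed_within_sla": True}]
kpis = weekly_kpis(week, recurring={"payment timeout"}, actions=actions)
```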

Ask five direct questions to separate symptoms from cause

Ask these five direct questions and log numeric answers within 48 hours; use them to map symptoms against root causes with at least three data points per item.

Q1 – How often did the issue occur in the last 14 days? Record exact amount and size (minutes, occurrences). If >3 events per week or average event >30 minutes, mark as high frequency. At each entry note moods and emotions on a 1–10 scale and timestamp; this creates a baseline useful when comparing later solutions.

Q2 – When did the pattern begin? Enter month of onset and count elapsed months. Compare workload, sleep hours and major events; if a metric shifted beyond 25% around onset, record the direction of change. Use that timeline to prioritize hypotheses with closest temporal correlation.

Q3 – What happened immediately before most episodes? List actions, locations, people and inputs. Run one small experiment removing a single trigger over two weeks while actively tracking outcomes; avoid changing multiple variables at once. Measure difference in occurrence rate, intensity and signs like teeth grinding or sleep interruptions.

Q4 – What solutions were tried and what changed? Itemize each solution, note duration in days or months, amount of change (percent or 0–10 impact), who provided support and what knowledge gaps remain. Prioritize low-cost experiments that can be repeated; record what you hear from colleagues or clinicians as external data points.

Q5 – How will you know the cause is addressed? Define three measurable metrics: occurrence count per week, a mood score, and a sleep metric. Set a target size and timeline (example: 40% reduction within 3 months). Include subjective signals such as feeling motivated, fewer tasks you dread, and a clear increase in clarity over baseline.

Use the answers to build a prioritized action list: run one low-cost experiment each week, collect data, and share results with a trusted contact so you can hear a new perspective and expand your knowledge. Mindful tracking and seeking support produce more durable solutions and avoid wasted effort on surface symptoms.

Collect the smallest dataset that proves the pattern

Collect exactly 30 labeled cases: 20 positive examples that match the target pattern and 10 negative controls; each entry must include five fields–timestamp, context, action, outcome, confidence–and be labeled by at least two people within 48 hours. Allocate 2 hours per day across a 3-day schedule to gather them.

Use a spreadsheet plus a lightweight labeling tool and keep total dataset size between 30 and 50 rows; larger size dilutes clarity without new signal. Log hours spent per entry; keep context under 300 characters to reduce noise and aid quick learning. Look at duplicates and near-duplicates; remove them to preserve independence of observations.

Ask them to attach a one-line feel tag (positive/negative/neutral) and a single gratitude note when applicable; these subjective fields help identify bias and remind evaluators that apparent beauty or novelty can be misleading. If a pattern isn't visible at 30 examples, treat that as a lesson, not a failure.

Have 3 people label each row independently during a scheduled block so majority vote resolves ambiguity; rotate labelers after several hours to avoid shared bias from being in the same space. If labelers are concerned about time, shorten each session to 45 minutes and return later; sitting too long reduces care and increases negative labels.
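Majority voting across three independent labelers is easy to automate. This is a minimal sketch, assuming labels arrive as plain strings; rows without a strict majority return `None` so they can be sent back for review (that fallback is an assumption, not something the text prescribes).

```python
from collections import Counter


def majority_label(labels):
    """Return the label chosen by a strict majority of labelers, else None."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    # Strict majority: more than half of the votes.
    return label if count > len(labels) / 2 else None


agreed = majority_label(["pattern present", "pattern present", "pattern absent"])
split = majority_label(["pattern present", "pattern absent", "unclear"])
```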

Track tools used (spreadsheet, CSV export, quick label UI) and timestamp each change; keep a changelog that identifies who edited what and when. If the signals you identify span decades of historical logs, expand the sample in the middle ranges to capture rare events; rather than chasing massive datasets, prioritize unique counterexamples that clarify boundary conditions and expose the real lesson.

Reserve 1 hour at the end of each day to review them and write a one-sentence lesson that will remind team members what seemed important; treat that note as a micro-learning artifact to boost creativity. Express gratitude to labelers; small acknowledgements improve care and reduce negative attitudes that can skew labels and how people feel about the task.

| Field | Type | Example |
|---|---|---|
| timestamp | ISO 8601 | 2025-11-19T09:30Z |
| context | string (≤300) | sitting in middle row, low light |
| action | string | pressed button A |
| outcome | label | pattern present |
| confidence | 0-1 | 0.8 |
| feel | tag | neutral |
| note | string | gratitude: quick help appreciated |

List assumptions to disprove with quick checks

Run three rapid checks: a 48-hour landing smoke test, a 7-day cohort retention pulse, and a 15-minute customer interview.

Validate a product feature assumption with an A/B test: allocate equal traffic to variant and control, target at least 100 conversions per arm; if baseline conversion rate is 2% collect ~5,000 users per arm. Use p<0.05 and power 0.8 as rejection rules; stop early only when pre-registered criteria hit.
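The "~5,000 users per arm" figure follows directly from the conversion target: 100 conversions at a 2% baseline. The sketch below reproduces that arithmetic and, as an addition not taken from the text, includes the standard normal-approximation sample size for comparing two proportions so you can see which lift a given sample can detect at p<0.05 and power 0.8. Function names are hypothetical.

```python
import math
from statistics import NormalDist


def users_per_arm(target_conversions, baseline_rate):
    """Users needed per arm to expect `target_conversions` at `baseline_rate`."""
    # The small epsilon guards against float round-off pushing ceil() up by one.
    return math.ceil(target_conversions / baseline_rate - 1e-9)


def users_for_lift(p_control, p_variant, alpha=0.05, power=0.8):
    """Per-arm sample size to detect p_control -> p_variant (two-sided test).

    Normal-approximation formula for two proportions; a planning estimate,
    not a substitute for a pre-registered power analysis.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_variant - p_control) ** 2)


n_from_text = users_per_arm(100, 0.02)       # 100 conversions at a 2% baseline
n_for_one_point = users_for_lift(0.02, 0.03)  # detect a rise from 2% to 3%
```

Comparing the two numbers before launch shows whether the planned traffic can detect the lift you actually care about.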

Quick-market check: publish a minimal landing page and spend $50 on targeted ads around the expected buyer persona; track signup rate, cost-per-acquisition, and ad click-to-signup funnel within 48 hours. Compare results against published industry benchmarks to decide next action.

Product-use assumption: measure consistent engagement windows – day 0–7 active rate, day 30 retention, and weekly churn. Treat a random 3% absolute rise in short-term metric as noise unless segmentation shows transformed behavior among a defined cohort.

User belief tests: contact at least five recent users; ask closed questions about perceived value, willingness-to-pay, and what would make them switch plans. Anyone with prior cancellation intent is high-value; those answers will reveal friction and healthy demand signals.

Technical and ops checks: deploy behind a switch flag and force 1% traffic through the new path to monitor latency, error rate, and feature toggles. Keep observability tight; log every exception tied to the change so engineering can isolate issues quickly.

Price and offering assumptions: run a price sensitivity microtest across three prices with identical messaging, observe conversion lift and revenue per visitor. If revenue per visitor does not rise at least 8% at a higher price, reject the pricing assumption.
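The 8% revenue-per-visitor rule can be written as a small check. This sketch assumes each price arm is summarized as a `(revenue_usd, visitors)` pair; the function names are hypothetical and the numbers are illustrative only.

```python
MIN_RPV_LIFT = 0.08  # from the text: reject unless revenue per visitor rises at least 8%


def revenue_per_visitor(revenue_usd, visitors):
    return revenue_usd / visitors


def accept_higher_price(base, candidate, min_lift=MIN_RPV_LIFT):
    """Return (accepted, relative_lift) for a higher-price arm versus the base arm.

    `base` and `candidate` are (revenue_usd, visitors) tuples (assumed shape).
    """
    base_rpv = revenue_per_visitor(*base)
    cand_rpv = revenue_per_visitor(*candidate)
    lift = (cand_rpv - base_rpv) / base_rpv
    return lift >= min_lift, lift


accepted, lift = accept_higher_price((1000.0, 500), (1100.0, 500))  # RPV 2.00 vs 2.20
rejected, small_lift = accept_higher_price((1000.0, 500), (1050.0, 500))  # RPV 2.00 vs 2.10
```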

Decision rules to keep or discard an assumption: require consistent improvement across at least two independent metrics, absence of new operational issues, and qualitative confirmation described by customers. If these fail, switch strategy; if they pass, expand sample and track long-term impact beyond 90 days.

Behavioral signals to monitor: NPS change, support volume, refund requests, and usage depth. Everything trending negative signals product-market mismatch; everything trending positive suggests the hypothesis is better than baseline and can be scaled.

Communication and ethics: be transparent in experiments, offer opt-out, and remain compassionate when contacting users. Document tests, publish results internally, and keep a registry so teams will avoid duplicated efforts and learn from those outcomes.

Step 2 – Generate Actionable Fixes

Assign one owner and a 7-day test window: deliver three fixes with a clear hypothesis, numeric success criteria, and an executable rollback plan.

- Hypothesis & metrics: Write a one-line idea and a short introduction to expected impact. Knowing baseline (14-day avg, sample size, SD), express expected change as absolute delta and percent change: target +0.10 absolute or +15% relative. Notes must include collection location and level of aggregation (user, session, transaction) and a simple comparison vs baseline that shows the expected difference.

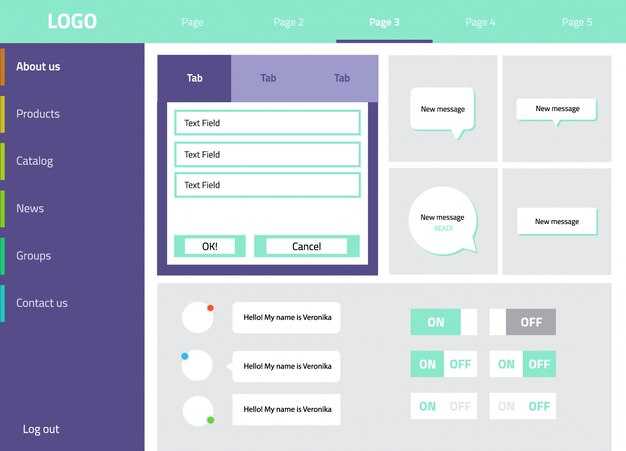

- Design artifacts: Attach screenshots, copy, acceptance tests and videos that demonstrate the UI or flow. Include a compact table that lists current vs proposed elements, exact copy changes, pixel or millisecond tolerances, and who will sign off.

- Implementation & ownership: Name owner (example: Shawn), branch, deploy window, build tag and rollback criteria. If the metric doesn't improve by at least 50% of target within 48 hours, run the documented rollback script. Include exact commands, monitoring queries and smoke tests to verify restoration.

- Execution & analysis: Run parallel A/B with power calculation targeting 80% power at alpha 0.05; segment by location and user level. Take a breath, then analyze deeply; though small segments may show noise, if effect is dramatically large run a validation cohort before full rollout. Use comparison charts with absolute difference, percent change and 95% CI.

- Documentation & follow-up: Fully annotate commit notes and publish a thoughtful summary that states what worked, what didn't, and road map items. Present findings in a short readout and attach whatever videos or logs help reviewers. List ways of overcoming each obstacle, owners for the next three tasks, and deadlines.
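The rollback criterion in the list above (less than 50% of the target improvement after 48 hours) can be expressed as a guard that monitoring can evaluate. This is a sketch under stated assumptions: the metric is a single number where higher is better, the target is an absolute delta, and the function names are hypothetical.

```python
def percent_change(baseline, observed):
    """Relative change versus baseline, in percent."""
    return 100.0 * (observed - baseline) / baseline


def should_rollback(baseline, observed, target_delta, hours_elapsed,
                    min_fraction=0.5, window_hours=48):
    """True when the 48-hour window has passed and the observed improvement
    is below `min_fraction` of the absolute target delta."""
    if hours_elapsed < window_hours:
        return False  # still inside the observation window, keep measuring
    return (observed - baseline) < min_fraction * target_delta


# Illustrative numbers: baseline 0.40, target +0.10 absolute.
too_small = should_rollback(0.40, 0.44, target_delta=0.10, hours_elapsed=48)
good_enough = should_rollback(0.40, 0.46, target_delta=0.10, hours_elapsed=48)
too_early = should_rollback(0.40, 0.41, target_delta=0.10, hours_elapsed=24)
```

The function only decides; the documented rollback script and smoke tests still do the actual restoration.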

Create constraint-based solutions you can test in one day

Run a one-day constrained experiment that isolates a single metric and measures impact within 24 hours.

- Hypothesis: Reduce checkout fields from 6 to 3 and expect a ≥10% completion uplift within 24 hours; state baseline conversion rate, target uplift, and acceptable error margins.

- Team and tools: marshal a minimal crew – one engineer willing to push the change, one designer, one analyst. Use a feature-flag system and split traffic 50/50. Ensure analytics, error logging and payment systems are active.

- Sample sizing: if daily sessions = 10,000, allocate 5,000 per variant; to detect a 5% relative lift at 95% confidence you need ~4,000 events per arm. If traffic is lower, extend the test window to reach that sample.

- Implementation checklist
  - Change one element only (field count) to keep attribution clear.
  - Place a one-sentence introduction on the test page stating the visible change.
  - Disable external campaigns during the run to avoid noise.
  - Prepare a safe rollback flag and a hotfix path in the release pipeline.
  - Publish an internal test version and tag events with a test type label.

- Metrics and analysis: define conversion = completed checkout ÷ sessions. Use a two-tailed 95% CI, report absolute and relative effect sizes, and show raw event counts plus analytics-processed numbers to maintain clarity.

- Communication: notify product, ops and legal parties of the test window and expected metrics, and commit to rolling back on anomalous errors. Share a live dashboard that shows progress soon after launch.

- Decision rules
  - If lift ≥ target and p ≤ 0.05: promote change to main version and document the piece of code and design that changed.
  - If lift < target or noisy: mark as missed opportunity, capture hypotheses about why, and schedule a next quick experiment that tweaks one element of the current change.
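The metric definition and decision rules above can be sketched with a two-sided, two-proportion z-test. This is one common way to obtain the p-value and 95% CI, not necessarily the method your analytics tool uses; the function names, the returned keys, and the 10% target default are assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_test(conv_a, n_a, conv_b, n_b):
    """Compare control (a) and variant (b); conversion = completed checkout / sessions."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a

    # Pooled standard error for the hypothesis test (H0: no difference).
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-tailed

    # Unpooled standard error for the 95% confidence interval on the difference.
    se_diff = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z95 = NormalDist().inv_cdf(0.975)
    return {
        "abs_lift": diff,
        "rel_lift": diff / p_a,
        "p_value": p_value,
        "ci95": (diff - z95 * se_diff, diff + z95 * se_diff),
    }


def decide(result, target_rel_lift=0.10, alpha=0.05):
    """Apply the decision rules: promote only if lift >= target and p <= alpha."""
    if result["rel_lift"] >= target_rel_lift and result["p_value"] <= alpha:
        return "promote"
    return "iterate"


# Illustrative counts only: 5,000 sessions per variant.
clear_win = two_proportion_test(1000, 5000, 1150, 5000)
weak_result = two_proportion_test(1000, 5000, 1020, 5000)
```

Reporting `abs_lift`, `rel_lift` and `ci95` together matches the instruction to show both absolute and relative effect sizes alongside raw counts.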

Include a two-paragraph research note in the article: beginning context, what realities the test revealed, what participants felt and which systems behaved as expected. Mention the greatest insight and the exact type of next-test to run; keep the write-up short, concrete and easy to act on so teams stay excited to repeat the approach.

Rank fixes by speed-to-test and resource need

Prioritize fixes that can be validated within 48 hours and require under 16 person-hours; run triage on Tuesday and assign a tester+dev pair to each item. Allocate half of weekly QA capacity to these quick experiments, sort candidates by the numeric score = (impact_score × confidence %) / (time_to_test_days × person_days), and select the top 8–12 for the next sprint so that measurable results appear every 7–14 days.

Use three buckets with explicit thresholds: Fast = 0–2 days to test and ≤2 person-days; Medium = 3–7 days and ≤5 person-days; Slow = >7 days or >5 person-days. If a ticket cannot be tested within 7 days or is blocked waiting on an externally owned API, mark it Slow and add a required mitigation (mock, contract test, or rollback plan). Document the direction and expected metric change for each fix in the ticket header description so stakeholders and the community can trust the prioritization and replicate the comparison later.

Set concrete operating targets: median time-to-test = 24 hours, target confidence ≥ 60%, a bench of 4 quick tests per week, and Slow items capped at 20% of roadmap capacity in a startup or R&D sprint. Small experiments often spark adoption; quantify that by tracking one primary metric and two secondary metrics per fix. Encourage a discovery mindset rather than emotional attachment to a single ticket: the simplest test can produce actionable data, and fast feedback builds trust, momentum, and clarity. Do not change rankings until new data arrives. Keep a daily cadence and escalate only when a ticket is blocked >48 hours or a metric drops by one level; instead of arguing over hypotheses, run the smallest experiment that proves direction.
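The scoring formula score = (impact_score × confidence %) / (time_to_test_days × person_days) and the Fast/Medium/Slow buckets can be sketched as follows. The `Fix` dataclass and its field names are hypothetical, the floor on the denominator is an assumption added to avoid dividing by zero for same-day tests, and cases the thresholds leave undefined (for example 2 days but 3 person-days) are treated as Medium.

```python
from dataclasses import dataclass


@dataclass
class Fix:
    name: str
    impact_score: float
    confidence_pct: float
    time_to_test_days: float
    person_days: float


MIN_DENOMINATOR = 0.25  # assumption: floor so a 0-day test does not divide by zero


def score(fix):
    """(impact_score x confidence %) / (time_to_test_days x person_days)."""
    days = max(fix.time_to_test_days, MIN_DENOMINATOR)
    effort = max(fix.person_days, MIN_DENOMINATOR)
    return fix.impact_score * fix.confidence_pct / (days * effort)


def bucket(fix):
    """Fast: <=2 days and <=2 person-days; Slow: >7 days or >5 person-days."""
    if fix.time_to_test_days > 7 or fix.person_days > 5:
        return "Slow"
    if fix.time_to_test_days <= 2 and fix.person_days <= 2:
        return "Fast"
    return "Medium"  # everything in between (assumption for undefined cases)


def rank(fixes):
    """Highest score first."""
    return sorted(fixes, key=score, reverse=True)


# Illustrative candidates only.
candidates = [
    Fix("retry on timeout", impact_score=10, confidence_pct=80, time_to_test_days=5, person_days=4),
    Fix("shorter form", impact_score=8, confidence_pct=60, time_to_test_days=1, person_days=1),
    Fix("vendor API swap", impact_score=9, confidence_pct=90, time_to_test_days=10, person_days=6),
]
ordered = [f.name for f in rank(candidates)]
buckets = {f.name: bucket(f) for f in candidates}
```

Because time and effort sit in the denominator, a modest fix that can be tested in a day outranks a larger one that takes a week, which is the behavior the ranking rule is after.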
