Social Importance: Public Safety and Ethical Considerations

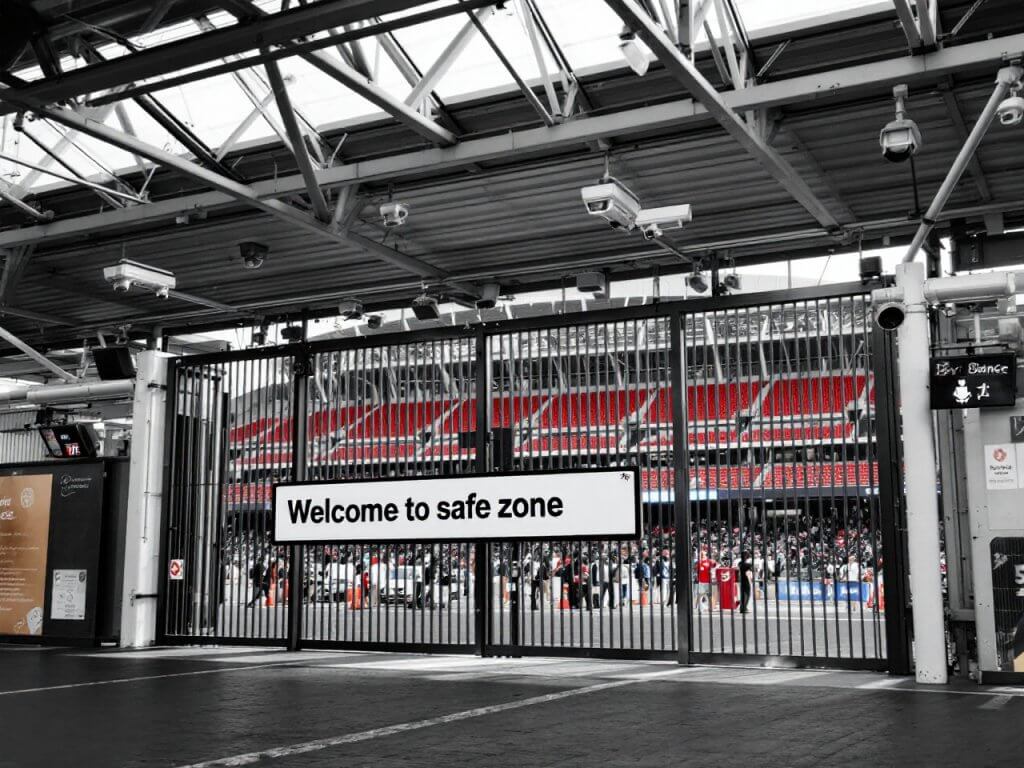

Enhancing Public Safety: Large public venues face growing safety challenges from fan violence, terrorism, and unruly behavior. Traditional security measures (guards, metal detectors, CCTV) often react to incidents rather than prevent them. The PSY Visor Cam introduces an AI-driven psychotype screening system to proactively identify individuals who may pose a threat before an incident occurs. Security experts emphasize that such proactive behavioral threat detection is a “proven method for preventing mass violence, sabotage, and other harmful acts,” allowing staff to get “one step ahead of the aggressor.” By analyzing subtle psychological and behavioral cues (e.g. facial expressions, body language, micro-signals of agitation), PSY Visor Cam can alert personnel to potentially aggressive or unstable individuals in real time. This capability augments conventional security – for example, spotting an agitated fan without a weapon who might start a fight, or identifying a person exhibiting signs of extreme stress or narcissistic hostility even if they have no criminal record. In effect, PSY Visor Cam acts as an ever-vigilant observer, helping security teams prevent incidents rather than just respond to them. This enhances public safety by enabling early intervention (e.g. friendly de-escalation by staff or additional screening), potentially averting brawls or worse (such as an attacker reaching their target). The social value is clear: safer environments at sports games, concerts, and nightclubs mean a more enjoyable experience for the public and fewer tragedies on the news.

Ethical Guardrails: Introducing AI psychometric screening in public venues raises serious ethical questions. Venue operators must ensure that profiling is based on behavior and risk indicators – not on race, ethnicity, or other protected traits. PSY Visor Cam’s algorithms should be trained to recognize universally relevant cues of aggression or high-risk mental states while avoiding biases. It is crucial that any flag generated by the system is interpreted and verified by human security staff, not treated as automatic guilt. (Notably, some vendors like Oddity.ai design their aggression-detection AI to support a “human-in-the-loop” approach and not identify individuals’ faces, thus maintaining human judgment and privacy in the process.) Soulmatcher’s solution must similarly serve as a decision-support tool for trained security personnel, rather than a dystopian judge. Transparency with the public is also key to ethical deployment: venues should inform attendees that an AI safety system is in use, what it looks for, and how data is handled. This openness can build trust and demonstrate that the goal is public safety, not intrusive surveillance. Engaging independent experts (in security ethics, psychology, and AI) to audit PSY Visor Cam’s algorithms would further validate that its psychotype assessments are evidence-based and avoid unjust profiling. Encouragingly, law enforcement advisors note that behavior detection methods have been “rigorously field-tested” over decades and can be highly effective when integrated properly, especially as part of a broader security strategy. By adhering to best practices, PSY Visor Cam can align with ethical norms and even set a new standard for responsible AI use in public safety.
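
The human-in-the-loop requirement above can be made concrete with a small sketch. Everything here is hypothetical and for illustration only: the `BehaviorFlag` record, the `ReviewDecision` states, and the 0-to-1 score scale are assumptions, not part of any described PSY Visor Cam interface. The point it illustrates is that a model score alone never authorizes action; a named human reviewer must resolve the flag first.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ReviewDecision(Enum):
    PENDING = "pending"
    DISMISSED = "dismissed"
    ENGAGE = "engage"  # e.g. friendly check-in or additional screening


@dataclass
class BehaviorFlag:
    """Hypothetical alert record; it carries no identity fields by design."""
    camera_id: str
    cue_summary: str      # observed behavior only, e.g. "shouting, clenched fists"
    risk_score: float     # model output; the 0-to-1 scale is an assumption
    decision: ReviewDecision = ReviewDecision.PENDING
    reviewer: Optional[str] = None


def review(flag: BehaviorFlag, reviewer: str, decision: ReviewDecision) -> BehaviorFlag:
    """Record the human decision; only a named reviewer can resolve a flag."""
    if not reviewer:
        raise ValueError("a human reviewer is required")
    if decision is ReviewDecision.PENDING:
        raise ValueError("a review must resolve the flag")
    flag.reviewer = reviewer
    flag.decision = decision
    return flag


def may_act_on(flag: BehaviorFlag) -> bool:
    """Staff may act only after a human has chosen ENGAGE, whatever the score."""
    return flag.decision is ReviewDecision.ENGAGE and flag.reviewer is not None
```

In this sketch a flag with a very high score still returns `False` from `may_act_on` until a reviewer has explicitly chosen `ENGAGE`, which mirrors the "decision-support tool, not judge" framing.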

Privacy and Legal Compliance: Any system analyzing personal characteristics at venue entrances must strictly comply with privacy laws in Western jurisdictions. In the European Union, GDPR treats biometric data used to identify individuals as a special category of personal data, and psychometric profiling built on such data is likely to face the same scrutiny, meaning its use requires a strong legal basis and safeguards. Simply obtaining consent may not be sufficient. For example, a Spanish football club (Osasuna) was fined €200,000 under GDPR in 2024 for implementing an optional facial recognition entry system – even though fans had to pre-register and consent, regulators ruled the club failed to show the biometric processing was truly necessary and proportionate. This case underscores that PSY Visor Cam’s deployment must satisfy the EU’s strict principles of data minimization, necessity, and proportionality. In practice, that means the system should collect only the data needed for security (e.g. real-time risk scores without storing identifiable images long-term), use it only for security purposes, and prove that no less-intrusive method would achieve a comparable level of safety. Soulmatcher should conduct Data Protection Impact Assessments and possibly seek approval from data protection authorities before European rollouts. Technical measures can bolster compliance – for instance, performing on-device analysis without uploading video to the cloud, blurring or immediately discarding faces that are not flagged, and ensuring “privacy by design” as Oddity.ai does (their violence detection is anonymized and stores no personal identifiers, operating fully within GDPR guidelines). In the United States, privacy laws are more fragmented: there is no federal GDPR equivalent, but states like California (CCPA/CPRA) and Illinois (BIPA) impose requirements. CCPA/CPRA would require venues using PSY Visor Cam to disclose the data collection in their privacy policies and uphold rights like access and deletion for California residents.
BIPA (Illinois’ Biometric Information Privacy Act) mandates prior consent for biometric data capture and has stiff penalties for non-compliance – a cautionary tale being the Six Flags amusement park, which faced legal action for fingerprinting guests without proper consent. Thus, in Illinois or similar jurisdictions, explicit opt-in from ticket holders might be needed before using PSY Visor Cam. In the UK, data protection follows GDPR standards (UK-GDPR), so similar care is needed. Overall, PSY Visor Cam’s design and policies must prioritize privacy at every step: informing attendees, securing data with encryption, limiting access to outputs on a need-to-know basis, and purging data routinely. By doing so, the system can achieve its safety mission while respecting individual rights, turning a potential public concern into public support. When people see that the system operates fairly and transparently within legal boundaries, they are more likely to embrace the added security it provides.
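
As a rough illustration of the data-minimization and routine-purging measures discussed in this section, the sketch below stores only a risk score and a gate identifier (no image, face template, or name) and deletes records after a fixed window. The names `RiskEvent`, `record_event`, and `purge_expired`, and the 15-minute retention window, are assumptions chosen for the example; they are not a legal threshold or a description of the product's actual data model.

```python
import time
from dataclasses import dataclass
from typing import List, Optional

RETENTION_SECONDS = 15 * 60  # illustrative retention window, not a legal threshold


@dataclass(frozen=True)
class RiskEvent:
    """Stores a score and a location only: no image, face template, or name."""
    gate_id: str
    risk_score: float
    created_at: float  # Unix timestamp


def record_event(store: List[RiskEvent], gate_id: str, risk_score: float,
                 now: Optional[float] = None) -> RiskEvent:
    """Append a minimal event; the video frame itself is never passed in."""
    event = RiskEvent(gate_id, risk_score, time.time() if now is None else now)
    store.append(event)
    return event


def purge_expired(store: List[RiskEvent], now: Optional[float] = None) -> int:
    """Delete events older than the retention window; return how many were removed."""
    current = time.time() if now is None else now
    kept = [e for e in store if current - e.created_at < RETENTION_SECONDS]
    removed = len(store) - len(kept)
    store[:] = kept  # mutate in place so callers holding the list see the purge
    return removed
```

A deployment would typically call something like `purge_expired` on a schedule; the right retention period is a question for the Data Protection Impact Assessment rather than for code.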

Expert and Public Support for AI Screening: Despite understandable privacy worries, there is a growing acknowledgment among security professionals that AI-assisted screening is necessary to safeguard crowded venues in modern times. Human vigilance alone cannot effectively monitor tens of thousands of spectators – crucial warning signs get missed. Advanced algorithms never tire or look away, making them ideal “eyes” to assist human guards. For instance, Dutch police have for years trusted an AI system to watch CCTV feeds for violent behavior and alert officers in real time. That system, Oddity.ai, even avoids capturing faces and still manages to catch brawls and assaults as they happen, underscoring that well-designed AI can enhance safety without trampling privacy. Security consultants also note that behavioral threat detection, whether by trained personnel or AI, acts as a strong deterrent – if would-be troublemakers know they are likely to be spotted before they act, many might think twice. This deterrence and early intervention can protect not only patrons but also staff like security guards (who often bear the brunt of violence) and even the potential offenders themselves from harm. Ethically deploying PSY Visor Cam could thus be framed as an act of social responsibility: it aims to prevent violence, reduce injuries, and promote a culture of safety in public gatherings. Academics and psychologists are increasingly supportive of using behavioral cues for threat assessment, as long as the systems are properly validated. In an era of frequent mass events and the ever-present risk of lone-wolf attackers or sudden crowd panic, the social importance of proactive screening cannot be overstated.
PSY Visor Cam’s psychotype analysis might identify, say, an individual exhibiting signs of extreme stress and hostile ideation entering a packed arena – an opportunity to discreetly pull them aside for a conversation or additional checks, potentially averting a nightmare scenario. When communicated effectively (e.g. “This venue uses advanced AI safety screening to protect everyone.”), such measures can also reassure the public. In summary, PSY Visor Cam addresses a critical public need: keeping venues safe in a way that is intelligent, preventive, and aligned with democratic values. By coupling innovative AI with ethical oversight, it justifies itself as not only a tech product but a public safety initiative endorsed by experts in security and psychology.

Market Analysis: Biometric and AI Security for Venues in Europe, UK & US

Booming Demand for Smarter Venue Security: Across the European Union, United Kingdom, and United States, the market for biometric and AI-powered security systems at venues is expanding rapidly. Venue operators are investing heavily in technologies that can strengthen access control and threat detection, driven by both security concerns and the desire to improve fan experiences. The global stadium security market (covering surveillance, access control, etc.) was valued at around $6.24 billion in 2017 and is projected to reach $16.06 billion by 2025, a robust ~12.8% annual growth rate. North America alone comprised roughly 40% of that market in 2017, and Europe also represents a significant share, indicating that Western venues are at the forefront of this growth. More specifically, biometric technologies are becoming a top priority: one recent industry survey found that almost half of live event venues (about 47%) rank biometrics as a top initiative for 2025. This reflects a major shift in security strategy – moving beyond metal detectors and ticket scanners toward facial recognition entry, fingerprint or palm-vein ticketing, AI video analytics, and more. The overall biometrics market in Europe (across all sectors) was about $12.4 billion in 2024 and is forecast to roughly triple to $39+ billion by 2033, with secure identification and access control as key drivers. A substantial portion of this growth will come from applications in large venues, where verifying identities and screening crowds efficiently is both a logistical challenge and a safety imperative.
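
The growth figures quoted above can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below applies it to the two market estimates in this paragraph, treating the quoted years as exact endpoints (an assumption; the underlying reports may use a different base year, which would explain small differences from the cited rate). Under that assumption the stadium security figures work out to roughly 12.5% per year, close to the ~12.8% quoted.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the paragraph above (USD billions).
stadium_security = cagr(6.24, 16.06, 2025 - 2017)
europe_biometrics = cagr(12.4, 39.0, 2033 - 2024)

print(f"Stadium security, 2017-2025: {stadium_security:.1%}")
print(f"European biometrics, 2024-2033: {europe_biometrics:.1%}")
```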

Venue Segmentation and Scale: The target market spans a variety of venue types, each with its own scale and security needs:

- Stadiums (Sports Arenas): This includes open-air or large enclosed stadiums used for sports like football (soccer), rugby, American football, baseball, etc. Europe is home to hundreds of professional football stadiums – for instance, the top-division clubs across UEFA countries easily number over 200, and that’s not counting lower leagues or national team venues. The UK alone has dozens of major football grounds (from 90,000-seat Wembley down to 20,000-seat club venues), and the US boasts sizable stadiums for NFL (32 teams), MLB (30 teams), and major college football (100+ large stadiums) among others. By one estimate, Europe actually has more stadiums than the US (on the order of 1,200 vs 844, considering all sizes), highlighting the extensive footprint. Stadiums typically host tens of thousands of people at a time, and security spending is correspondingly high – large stadiums can spend millions per year on security staff, fencing, cameras, and control room operations. In recent years, “smart stadium” upgrades have become common, bundling high-speed connectivity with advanced security screening and analytics. The result: many stadium owners are actively evaluating biometric gates and AI surveillance. For example, UEFA Champions League and other international matches have prompted stadiums to tighten entry controls to prevent hooliganism and terror threats, sometimes even under government pressure. This is leading to pilot programs of facial recognition at turnstiles and AI monitoring of crowds. The adoption trend is clear: a growing number of stadiums in the EU and US have either tested or implemented biometric ID for fans, staff, or VIPs – and those that haven’t are watching closely. Industry data shows 37% of venues already use biometrics in some capacity (mostly for staff entry or media access) and many more plan to start.
With ongoing incidents of pitch invasions, fights in stands, or gate-crashing, stadium operators see AI-based screening as a way to bolster safety without hiring hundreds more personnel. We can expect the stadium segment to be one of the most fertile markets for PSY Visor Cam, given these trends and the sheer volume of potential end-users (the security teams of thousands of stadiums across Europe and North America).

- Arenas and Concert Halls: These are indoor venues typically ranging from 5,000 to 25,000 seats used for basketball, hockey, concerts, and other entertainment events. In the US, many NBA and NHL teams play in such arenas (often around 20,000 capacity), and in Europe, there are numerous multi-purpose arenas (the O2 in London, Accor Arena in Paris, Mercedes-Benz Arena in Berlin, etc.) that host concerts and sports like indoor tennis or EuroLeague basketball. There are also iconic concert halls and theaters for performing arts that, while smaller, host high-profile events. Venue counts: The US has on the order of 150+ major arenas when considering pro sports and large concert venues; Europe similarly has hundreds of arenas and large theaters spread across its countries. Security concerns here are as intense as in stadiums – a tragic reminder was the 2017 Manchester Arena bombing in the UK, which underscored vulnerabilities at concert venues. Consequently, even mid-sized arenas are ramping up screening at entrances (bag checks, magnetometers, and now exploring biometric ticketing to eliminate paper tickets and fraud). Annual security expenditures vary by venue size but can still be significant (multi-million budgets for the largest arenas, especially when hosting big events). Adoption trends: Many arenas are following in the footsteps of stadiums. For instance, several NBA arenas in the US have partnered with biometric companies to offer face-recognition ticket lanes or palm-scan payment for concessions. In the EU, arenas used in international competitions (like those for the EuroBasket tournament or Eurovision Song Contest) have trialed facial recognition for accrediting staff and performers. The COVID-19 pandemic accelerated some of this, pushing venues to seek contactless entry solutions, which made technologies like face or palm recognition appealing for hygiene as well as speed. 
Going forward, arenas are likely to integrate AI surveillance inside the venue too – e.g. monitoring crowd density to prevent crushes, detecting fights in the stands, or even identifying VIPs for service. PSY Visor Cam’s psychotype screening could add value here by scanning for potentially aggressive attendees in general admission areas or spotting signs of panic in a crowd (allowing early intervention in case of dangerous overcrowding or a fire).

- Nightclubs and Entertainment Venues: Nightclubs, music venues, and large bars/pubs constitute a different segment – typically smaller capacity (a few hundred to a few thousand patrons) but often densely packed and prone to altercations. Major cities in Europe and the US each have dozens of popular clubs, and collectively the number of nightlife establishments is in the many thousands. While each venue is smaller than a stadium, the aggregate market and need for safety is huge. Nightclubs face issues like brawls, sexual harassment, drug-related incidents, and occasionally worse (some terror attackers have targeted nightclubs, as seen in Paris 2015 or Orlando 2016). Traditionally, clubs rely on bouncers checking IDs and watching for trouble, but now some are turning to tech. In the UK, for example, facial recognition is making inroads at club entrances – certain London clubs have begun using face scanners to compare guests to a watch-list of known troublemakers or underage patrons. A French report noted that in London this technology, which tracks “undesirable” individuals, is “increasingly common in…nightclubs.” Companies like Facewatch in the UK provide a shared database of individuals banned for violence or shoplifting, and clubs install Facewatch’s cameras to automatically alert if a flagged person tries to enter. In the US, upscale clubs in cities like Las Vegas or Miami have experimented with biometric ID checks for VIP entry. Spending & adoption: Nightclubs generally have smaller tech budgets, but chains or high-end venues are willing to invest in systems that can prevent headline-grabbing incidents. The trend is nascent but growing – as costs of AI cameras fall, even mid-sized bars can deploy systems that previously were only in casinos or airports.
PSY Visor Cam could tap this segment by offering a solution that integrates with existing club camera setups, giving security staff an AI assistant to flag escalating tensions on the dance floor or identify a patron whose behavior (e.g. erratic movements, expression suggesting anger or predatory intent) merits attention. Because clubs operate in a more privacy-sensitive context (patrons expect a degree of anonymity), the system’s compliance and accuracy will be paramount here. Yet, if it can demonstrably reduce fights or assaults, insurers and regulators might encourage its use in nightlife districts. Notably, some city police departments in the West (e.g. in the UK) have run trials of live facial recognition in busy nightlife areas to catch wanted criminals, indicating official interest in tech-assisted monitoring. This suggests that private venues adopting internal AI screening is a plausible next step.

Spending Estimates: Annual spend on these technologies varies by segment. A large stadium or arena might spend anywhere from $1–5 million yearly on security operations (personnel, equipment, cybersecurity, insurance, etc.), of which an increasing portion is allocated to high-tech systems. As evidence, the stadium access control market (a subset of security tech focusing on entry systems) was about $5.7 billion in 2024 globally and is projected to double by 2034 – much of that growth comes from biometric entry gates and digital identity systems. Within Europe and North America, sports venues and concert organizers are key buyers driving this trend. We also see governments and city councils giving grants or funding for security upgrades in publicly-owned venues, especially after security incidents. Adoption rate trends: By 2025, a sizeable minority of Western venues will have implemented some form of biometric or AI screening. To illustrate, 70% of venues surveyed have adopted walk-through metal detectors or “smart” security scanners, and about 14% were already using biometric ticketing for fans as of 2024. These numbers are expected to rise year over year, especially as early adopters demonstrate the benefits (speed, security) to others. In summary, the market opportunity for PSY Visor Cam is expansive and growing: across the EU, UK, and US there are thousands of potential venue clients, collectively spending billions on security upgrades. The momentum is clearly toward AI-enhanced, biometric-enabled venues, creating a favorable environment for an innovative psychotype screening solution to find receptive customers.

Competitive and Technology Landscape (EU/UK/US Focus)

The security technology landscape for public venues is dynamic, with numerous players offering solutions from biometric identification to AI behavior analytics. Below is an overview of key categories of competitors and where PSY Visor Cam fits:

1. Facial Recognition and Biometric Access Systems: These systems are perhaps the most widely known in venue security. They focus on verifying or identifying individuals using unique biological traits – most commonly faces, but also fingerprints, iris scans, or even hand veins. In the Western markets, notable companies include NEC Corporation (whose facial recognition tech is used in systems like MLB’s Go-Ahead entry gates), Wicket (a startup specializing in facial authentication for sports venues), Clear (a U.S.-based firm known for airport biometric kiosks, now operating express lanes at stadiums), Veridas (a Spanish provider of face biometrics, used by Osasuna’s stadium), Idemia (French global leader in biometric solutions, supplying everything from border control to event access systems), and Facewatch (UK-based, focusing on retail and hospitality watch-lists). What they offer: These systems excel at fast and secure entry – e.g. replacing tickets or ID checks with a face scan. In Major League Baseball, facial recognition gates by NEC allowed fans to enter 2.5× faster than using paper or phone tickets, with lines moving 68% faster in a pilot. Wicket’s facial ticketing has been adopted by teams like the Cleveland Browns, Atlanta Falcons, New York Mets, and Tennessee Titans, and the NFL chose Wicket for a league-wide rollout of biometric entry for staff and media in 2024. Similarly, in Europe, Italy’s top soccer league (Lega Serie A) has plans to implement facial recognition at all stadiums for security and access control. These examples show that facial biometrics are becoming mainstream in venue operations. How PSY Visor Cam differs: Most facial recognition systems match a face against a known database (e.g. a season ticket holder list, or a watch-list of banned fans). They are about confirming identity or denying entry to specific persons.
PSY Visor Cam, in contrast, analyzes facial and body cues to assess personality and psychological state, irrespective of identity. It doesn’t need a pre-existing photo of a person to evaluate them. This means PSY Visor Cam can flag an unknown individual who exhibits a high-risk psychotype (for example, signs of aggressive narcissism or emotional volatility) even if that person has never been in trouble before. It’s a complementary function to traditional facial recognition (FR): while FR might tell security “this guest is Alice, a VIP” or “this person is on the banned list, stop them,” PSY Visor Cam would say “this guest, whose name we don’t know, is exhibiting concerning behavior or traits, pay attention.” Notably, PSY Visor Cam could integrate with FR systems: if FR identifies someone on a watch-list and PSY Visor Cam also flags them, that’s a strong signal to intervene. Conversely, if PSY Visor Cam flags someone who is not on any watch-list, security might discreetly engage to prevent an issue. Thus, PSY Visor Cam’s value lies in going beyond known identities to detect latent threats among the general crowd. It’s also worth noting that pure FR systems have faced pushback over privacy (scanning and storing faces), whereas PSY Visor Cam could be configured to analyze attributes without permanently recording identity – positioning it differently in terms of data handling. Nonetheless, in the competitive landscape, some might superficially bucket PSY Visor Cam with “facial recognition” tools, so Soulmatcher should clarify its unique approach when pitching to clients concerned about privacy or legality.

2. Biometric Ticketing and Fan Experience Platforms: Beyond security per se, some competitors emphasize seamless fan experience using biometrics. For instance, Clear (in the US) not only offers fast entry but ties that to concessions: at 13 of 30 MLB ballparks, Clear’s biometric platform lets fans enter with a fingerprint scan and then buy beer or hotdogs with the same biometric linkage to their credit card. In Europe, startups like Palmki and Perfect ID trialed palm-vein scanning for ticketless entry in a Belgian stadium. These solutions blur the line between security and convenience – they aim to reduce queues and fraud while adding a “cool factor” for fans. Adoption: Major sports leagues have embraced these; MLB’s “Go-Ahead” facial entry system and the NBA’s testing of biometric payments are examples. While not direct “competitors” to PSY Visor Cam in functionality, they compete for the same budget. A venue might choose to spend on faster entry systems rather than on threat assessment tools if it prioritizes convenience. PSY Visor Cam’s relation: Soulmatcher can position PSY Visor Cam as addressing a different need – it’s not about ticketing or payment, but about safety and risk management. However, it can complement fan experience initiatives: a safer venue ultimately means a better experience. Also, PSY Visor Cam could integrate with these platforms by using their biometric identification as additional input (e.g., if Clear identifies a fan and PSY Visor Cam indicates that fan is extremely anxious, perhaps security could offer assistance or ensure they’re not carrying something suspicious – turning a customer-service lens on it). Another distinction is that many fan experience systems require pre-enrollment (fans opt in by registering their biometric), whereas PSY Visor Cam does on-the-fly analysis of everyone. This means PSY Visor Cam potentially covers 100% of attendees, whereas opt-in systems typically cover a smaller subset (the willing early adopters).
Thus, PSY Visor Cam could be marketed as the solution that casts the widest safety net over the entire crowd.

3. AI Video Analytics – Behavioral Threat Detection: This category is closely aligned with PSY Visor Cam’s core functionality. A number of companies and research groups are developing AI that analyzes live camera feeds to detect abnormal or dangerous behavior. Aggression detection is a prime focus: algorithms can now recognize patterns of violence (like throwing punches, wrestling, or kicking) in real time. One notable player is Oddity.ai (Netherlands), whose software monitors CCTV feeds and raises an alarm the instant a fight or assault is detected. Dutch law enforcement has used Oddity’s system in city centers and even prisons, relying on it to catch violent acts that human operators might miss. Importantly, Oddity’s system is designed with privacy in mind – it does not identify faces or individuals; it purely looks for violent actions, operating within GDPR guidelines. This shows a possible model for PSY Visor Cam: focus on behavior, skip identity. Other companies in this space include Scylla (US), which offers “aggressive behavior detection” as part of its suite of AI security analytics, and Athena Security and Actuate, which have marketed violence recognition for schools and workplaces. Additionally, some established CCTV manufacturers (like Axis, Hikvision, or i-PRO) have begun building aggression or scream-detection analytics into their cameras, often via partnerships. Adjacent technologies: Also worth noting are weapon detection AI (e.g. ZeroEyes in the US focuses on detecting firearms on cameras in schools and events) and anomaly detection systems that look for unusual movements or gatherings (useful for spotting a crowd rush or someone sneaking into a restricted area). PSY Visor Cam’s differentiation: Traditional aggression detectors like Oddity.ai trigger alarms when violence has started – essentially when a punch is thrown or a brawl breaks out, the system flags it so security can respond faster.
PSY Visor Cam aims to fill an earlier part of the timeline: identifying pre-violence indicators and high-risk individuals before anyone throws a punch. It’s akin to the difference between a smoke detector and a fire prevention system; one alerts you when something bad is happening, the other tries to stop it from happening at all. Psychotype screening might detect that a particular patron has a volatile mix of traits or is in an agitated emotional state, suggesting they are more likely to initiate violence if provoked. Security could then keep an eye on that person or even engage them in a friendly way to defuse any anger. This preventive capability is distinctive. One could argue that well-trained human observers do this intuitively (e.g. an experienced bouncer can often spot the “troublemaker” in a bar by demeanor), but doing it at scale in a huge venue is where AI like PSY Visor Cam shines. In essence, PSY Visor Cam extends the concept of behavior detection from just recognizing actions (like throwing a punch) to assessing propensities (who is likely to throw a punch). There are few direct competitors doing personality or intent analysis via camera in Western markets – one example is Faception, an Israeli startup that claimed its facial analysis could identify terrorists or pedophiles by revealing personality traits. Faception’s approach stirred controversy and skepticism (as it touches on phrenology-like territory), and it’s not widely deployed. Soulmatcher’s PSY Visor Cam will need to scientifically substantiate its accuracy and avoid over-claiming. If it can do so, it would stand largely alone in offering a real-time psychometric evaluation tool for venue security. The competitive edge here is being first-to-market with a system that goes beyond what the eye can see, whereas other AI solutions are still reacting to obvious visual events.
Of course, PSY Visor Cam can integrate with those systems too – for example, if a weapons-detection AI flags someone for a gun and PSY Visor Cam also flags them for extreme stress, that’s a red-alert situation. Or vice versa: PSY flags someone and that prompts security to do a secondary screening where a weapon is then found. Such layered defense is exactly what high-security venues (like airports or government buildings) use; now entertainment venues are trending that way as well.

4. Traditional Security Firms and Integrators: It’s important to mention that many venues rely on large security integrators or IT firms (like Honeywell, Johnson Controls, Siemens, etc.) that bundle various security solutions into one package. These incumbents might not develop all tech in-house but will partner or acquire solutions. For instance, a stadium might contract a firm to install an integrated system with CCTV, access gates, and analytics software from multiple vendors. PSY Visor Cam should be prepared to enter this ecosystem, potentially by partnering with such integrators or getting listed as a compatible add-on in venue security management platforms (much like Oddity.ai integrated its detection into Milestone VMS). Competition here isn’t about one product vs another, but about being included in the overall security upgrade projects that venues undertake. Soulmatcher will need to articulate how PSY Visor Cam adds a layer that others don’t provide, and how easily it can plug into existing camera infrastructure (e.g. using standard CCTV feeds and outputting alerts to the control center software). Given that companies like NEC and Idemia are emphasizing compliance and accuracy in their facial recognition offerings for stadiums (marketing them as GDPR-compliant and highly reliable), Soulmatcher should likewise emphasize PSY Visor Cam’s robust testing, low false-positive rate, and privacy compliance. Differentiating from competitors on those terms (not just on unique features) will be important to win trust, as security buyers tend to be conservative and risk-averse.

In summary, PSY Visor Cam occupies a novel niche at the intersection of biometric ID and AI behavior analytics. Competitors in the West offer pieces of the puzzle – identity verification, weapon detection, violence alarms – but none offers the same blend of psychological profiling for proactive threat prevention. PSY Visor Cam can thus be marketed as complementary to virtually all existing systems: it adds a critical layer of insight rather than replacing something. A venue could use facial recognition to catch known bad actors and PSY Visor to catch unknown ones; use metal detectors to catch weapons and PSY Visor to catch hostile intent. This complementary strategy means the presence of established competitors actually helps validate the need (venues already believe in tech for security) while PSY Visor Cam ups the game. Soulmatcher should keep a close watch on emerging tech (for instance, any academic breakthroughs in emotion AI, or new startups focusing on “intent detection”) but currently, it enjoys a head start in Western markets for this specialized capability. By highlighting interoperability, ethical design, and a unique value proposition, PSY Visor Cam can secure a strong position alongside the current generation of venue security solutions.
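
The layered approach described in this section (identity checks, weapon detection, and behavioral screening feeding one response) can be sketched as a simple rule-based fusion. This is a hypothetical illustration: the `Signals` fields, the `AlertLevel` tiers, and the rules themselves are assumptions made for the example, and the handling of a weapon detection on its own is an added assumption not stated in the text. A real deployment would set these rules with security staff and keep a human reviewer in the loop.

```python
from dataclasses import dataclass
from enum import IntEnum


class AlertLevel(IntEnum):
    NONE = 0
    OBSERVE = 1     # keep an eye on the person or engage discreetly
    INTERVENE = 2   # stop at the gate or run secondary screening
    RED_ALERT = 3   # immediate response


@dataclass(frozen=True)
class Signals:
    """Hypothetical per-person inputs from independent systems."""
    on_watchlist: bool = False     # facial recognition match against a ban list
    weapon_detected: bool = False  # weapon-detection AI or metal detector
    behavior_flag: bool = False    # behavioral screening flag


def fuse(signals: Signals) -> AlertLevel:
    """Combine the layers with simple, illustrative rules."""
    if signals.weapon_detected and signals.behavior_flag:
        return AlertLevel.RED_ALERT
    if signals.weapon_detected or signals.on_watchlist:
        return AlertLevel.INTERVENE
    if signals.behavior_flag:
        return AlertLevel.OBSERVE
    return AlertLevel.NONE
```

For example, `fuse(Signals(behavior_flag=True))` yields `AlertLevel.OBSERVE`, matching the case of an unknown person who is flagged but is on no watch-list, while a weapon detection combined with a behavioral flag escalates to `AlertLevel.RED_ALERT`.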

Adoption Dynamics and Momentum in the West

Sports Leagues Driving Adoption: In Western markets, major sports organizations and leagues are powerful catalysts for security innovation. They often set guidelines or launch initiatives that member teams follow. In Europe, UEFA (the governing body for European football) has a vested interest in stadium security, especially after incidents like crowd violence or pitch invasions that tarnish the sport’s reputation. While UEFA stops short of mandating specific technologies (given varying laws in each country), it actively encourages clubs and national associations to modernize safety measures. We’re seeing clubs in UEFA competitions trial advanced systems – for example, Spain’s Osasuna (as noted) tried facial recognition for access, and other La Liga clubs have explored similar tech. Italy’s Serie A making plans for universal facial recognition at stadiums is a direct response to chronic fan violence issues; if implemented, it could set a precedent that ripples out to UEFA Champions League matches and beyond. The English Premier League, known for passionate fans and occasional hooliganism, has so far been cautious (no club-level facial recognition (FR) yet, partly due to UK privacy debates), but even there, police have used mobile facial recognition vans at high-risk matches, leading to arrests of wanted individuals. This indicates that the security apparatus around football is warming to AI, and it’s plausible that within a few years, top clubs will adopt more biometric or psychometric screening to enforce stadium bans and improve safety. PSY Visor Cam could benefit from this momentum by aligning its value with league-wide initiatives: for instance, if UEFA or a national league funds a pilot on “enhanced crowd screening,” PSY Visor Cam could be a candidate solution. In the US, leagues like the NFL and NBA have historically adopted such tech somewhat later but are now charging ahead.
The NFL, after years of trialing facial recognition for event staff, announced a league-wide deployment of biometric entry for the 2024 season, signaling full commitment to these tools. The NFL also has a clear interest in preventing fan violence (there have been high-profile fights in stands, and in some cases post-game violence). The league, along with venue owners, could see a system like PSY Visor Cam as a way to mitigate risks that lead to lawsuits or bad publicity. The NBA and NHL share many arenas, and with incidents like the “Malice at the Palace” (an infamous NBA brawl in 2004) lingering in memory, there’s an understanding that preventing unruly behavior is critical. In the realm of international sports events: the Olympics and FIFA World Cup have employed massive surveillance setups (e.g. the 2022 World Cup in Qatar used an array of cameras and facial recognition on spectators), which tends to normalize these practices and make domestic leagues more comfortable with them afterward. As Western sports leagues prioritize a safe, family-friendly environment, they create a top-down push for venues to adopt advanced screening. PSY Visor Cam can be positioned as part of that cutting-edge toolset that leagues endorse to stay ahead of threats.

Event Organizers and Venue Owners: Outside of league mandates, the actual owners and operators of venues are key decision-makers. Many stadiums and arenas are owned by municipal authorities or private companies that host a variety of events (sports, concerts, exhibitions). These operators are increasingly investing in tech both to improve safety and to reduce operational costs. A prime example is Madison Square Garden (MSG) in New York – a privately owned arena that implemented facial recognition security throughout the facility. MSG made headlines for using FR to screen attendees against an internal watch-list (even banning certain individuals like litigation opponents), showing that the venue was confident enough in the technology to deploy it at scale. This case, while controversial, demonstrated the feasibility: a major Western venue running real-time face scans on thousands of guests. It also highlighted how insurers and liability concerns play a role: MSG and its sister venues likely use such measures to identify persons who might threaten security (e.g. previously violent attendees or, in MSG’s case, those they consider persona non grata). Insurers who underwrite these venues take note of improvements in security. In fact, security analysts have pointed out that robust measures like actively monitored cameras reduce risks of incidents and fraudulent claims, which can translate into lower insurance premiums for the business. A venue that can demonstrate it has an AI system watching for conflicts or threats might not only prevent a costly incident (saving lives and money) but also negotiate better insurance terms. For instance, event cancellation insurance or liability insurance could be cheaper if a venue has state-of-the-art screening that lowers the probability of a violent disruption. There is a parallel in the business world where companies get discounts for alarm systems or sprinkler systems – by analogy, venues might get incentives for AI security.
At the very least, venue owners are driven by the desire to avoid lawsuits. In the US, if a fan is injured in a fight or an attack at a venue, they might sue the venue for inadequate security. Deploying tools like PSY Visor Cam provides an extra defense (“we did everything technologically possible to maintain safety”). We see an adoption dynamic where early adopters (like MSG, or the Seattle Seahawks’ CenturyLink Field, since renamed Lumen Field, which also used biometrics) validate the concept, and then others follow suit so as not to be left behind. Many venues are now in a phase of technological refresh, coming out of the pandemic with new budgets for digital transformation. Security tech is a big part of that, often under the umbrella of “smart venue” initiatives. PSY Visor Cam can ride this wave by showing it integrates with the new digital ecosystems venues are building.

Concert and Festival Organizers: Beyond fixed venues, many major events (music festivals, large concerts at temporary sites, etc.) are adopting biometric entry and considering AI security. Companies like Live Nation/Ticketmaster have experimented with face recognition for concert entry (Ticketmaster invested in Blink Identity, a facial ID startup, a few years ago). While some of those plans were slowed by privacy outcry, the trend remains: organizers seek faster entry and better control. Music festivals in Europe like Tomorrowland (Belgium) or sports events like the UEFA EURO tournament attract huge crowds and could deploy psychotype screening at entrances as an added layer of defense against things like gate-storming or catching a potential aggressor in a crowd of revelers. Since these events often involve intense emotions (excitement, sometimes alcohol/drug influence), having AI that monitors for emerging threats or panic can be invaluable for crowd management. Real-world use case: one could imagine PSY Visor Cam at a festival alerting security when a group of individuals starts showing signs of panic or aggression, enabling staff to intervene or calm things before a stampede or fight breaks out.

Insurance and Liability Influences: As touched on above, the insurance industry’s stance is an important adoption driver. Insurers dislike uncertainty, and violent incidents at venues can lead to hefty claims (for medical liability, property damage, event cancellation, etc.). If technologies like PSY Visor Cam demonstrably reduce the incidence or severity of such events, insurers may incentivize their use. For example, some insurance underwriters already ask venues about their security measures in detail; having AI screening could check a box that manual security can’t. There’s also the angle of counterterrorism: governments and insurers are extremely wary of terrorist attacks at mass events (which cause massive losses). While traditional counterterrorism focuses on weapons detection and intelligence, psychotype screening could add a novel dimension – potentially flagging a lone individual with deadly intent by their psychological tells. If PSY Visor Cam could even marginally improve detection of such threats, it would gain support from counterterror agencies and by extension the insurers who cover terror risk. We might soon see insurance policies that explicitly give premium credits to venues with certified advanced screening systems. Already, security consultants recommend that businesses upgrade cameras and live monitoring to get insurance benefits, and it’s likely this will extend to AI analytics as those become industry-standard.

Regulatory and Public Pressure: Adoption in Western countries is also shaped by public opinion and regulation. While there is momentum for biometric security, there is also vocal opposition from privacy advocates (as seen with UK fan groups opposing facial recognition at football matches and the EU Parliament discussing bans on AI mass surveillance). This creates a dynamic where deployments need to be done carefully and with clear justification. PSY Visor Cam can actually position itself advantageously here: because it is focused on safety and can be configured to work without personal identification, it might be more palatable than traditional facial recognition. If Soulmatcher emphasizes compliance (e.g. operating within GDPR’s allowances for security interests, obtaining consent via ticket terms, and ensuring no permanent databases of faces), then regulators might view it as a more acceptable form of “biometric” tech. Venues will adopt tech that they believe they can defend to their patrons and regulators. Therefore, highlighting successful deployments where the community was informed and supportive will be key. One likely scenario is a pilot program with a volunteer sports team or arena in, say, Germany or France, where fans are notified and perhaps even surveyed. If that pilot shows improved safety and fan acceptance, it will greatly accelerate adoption elsewhere. The media narrative is important: Western press has done stories on, for example, how facial recognition spotted a few hooligans but raised privacy fears – the narrative for PSY Visor Cam should be how it prevented a dangerous fight or identified a person needing help, demonstrating clear positive outcomes.

Real-World Use Cases on the Horizon: Consider a use case from a European football match: A heated rivalry game in a packed stadium, historically a fixture with fan clashes. With PSY Visor Cam monitoring the turnstiles and stands, security control notices that a cluster of visiting fans entering early exhibits abnormal aggression cues (maybe elevated agitation and dominance behaviors). They dispatch additional stewards to that section preemptively. During the match, PSY Visor Cam flags a home fan near the visiting section whose facial expressions and gestures indicate growing anger (perhaps reacting to provocation). Security, alerted in real time, moves toward him before any physical incident – as it turns out, he was about to throw a punch at a taunting rival fan when guards intervened and de-escalated. Result: a potential brawl involving dozens is averted. This is not far-fetched; it’s the kind of scenario the system is built for. Another case in a US context: an NFL stadium parking lot after a game, where tempers sometimes flare. An array of cameras with PSY Visor Cam scans fans filing out. It flags a group of young men who look extremely agitated and confrontational. Security pairs that info with their knowledge of a nearby group from the opposing team’s fans and proactively separates them or guides them out different exits. Again, a preventive action thanks to AI insight.

Each success story will build momentum. It’s a virtuous cycle: the more venues that install advanced systems and publicly tout improved safety or fan convenience, the more others feel pressure to follow suit so as not to appear outdated or less safe. We saw this with metal detectors – once a few stadiums added them, virtually all major venues did within a few years because fans came to expect that level of screening. We are now at a similar inflection point with biometrics and AI. A survey of venue executives found their top priorities include biometric solutions and walk-through AI scanners in the near term. The same survey noted that generative AI attracts hype, but concrete deployments are in biometrics and AI security. In fact, “walk-through security scanners” (like Evolv’s AI weapons detector) and biometric ID are high on tech deployment lists, showing that spending is aligned with security tech rather than gimmicks.

The Case for PSY Visor Cam: Given all these dynamics, PSY Visor Cam is entering the market at an opportune time. It addresses a real pain point – the need for proactive threat detection – which is not yet fully met by existing solutions. It does so in a way that can be made privacy-compliant and thus more socially acceptable in the EU/UK (where public tolerance for overt face recognition is lower). It complements the direction leagues and venues are already heading, adding a new capability that enhances the ROI of their other security investments (more catches means more value from those facial cameras, etc.). And it provides a strong narrative for stakeholders: Leagues can say they are championing innovation to protect fans; venue owners can reduce incidents and liability; event organizers can promise both safety and efficiency; insurers and regulators can be satisfied that cutting-edge risk mitigation is in place. Ultimately, Western adoption will hinge on demonstrated effectiveness and trust. PSY Visor Cam needs some early adopters to showcase success in the EU, UK, and US. Once those are publicized – e.g. “Stadium X saw a 30% drop in fan violence after implementing AI psychotype screening” or “Arena Y credits AI for preventing a major security incident” – we can expect a rapid uptick in interest across the industry.

In conclusion, the stage is set in Europe and North America for AI-driven screening systems to become a standard part of venue security. PSY Visor Cam stands at the forefront of this movement with its unique psychometric approach. Its social importance is underscored by the potential to save lives and make public gatherings safer. The market analysis shows a robust and growing demand for such technology, segmented across numerous venue types rich with opportunity. The competitive landscape, while populated with strong players, lacks a solution offering what PSY Visor Cam delivers, allowing it to differentiate and integrate synergistically. And the adoption trends – from sports leagues embracing biometrics to venues seeking risk reduction – all point toward a receptive environment for PSY Visor Cam in the Western world. By leveraging this momentum, aligning with ethical and legal standards, and clearly demonstrating its value, PSY Visor Cam can establish itself as a pivotal solution for public safety in the EU, UK, and US, capturing a significant market while contributing to a safer society.