Recommendation: Teams should adopt a three-tier verification protocol: (1) controlled reconstruction under recorded variables, (2) provenance cross-check against ≥2 independent archives, (3) statistical replication with a minimum n=5 and reported confidence intervals. Track elapsed time for each run, record what you know and what remains uncertain, and publish a short methods log with exact materials and measurement tolerances so others can reproduce metrics to within a stated margin.

Combine hands-on replication with artificial intelligence in hybrid pipelines where machine-learning models assist pattern recognition and anomaly detection. Pilot implementations report manual sorting time reductions in the 25–35% range when models are limited to transparent architectures and strict holdout validation. Use different model families, disclose training corpora, and include failure examples so that an automated assist does not obscure provenance or inflate apparent certainty.

Before public release, evaluate societal risks and set explicit boundaries: list sensitive elements, required redactions, and access tiers. Expect intense public attention, but manage expectations: popular narratives often overstate what replication results mean, and a numerical match does not imply full equivalence. The tendency to prize novelty over repeatability is hard to reverse unless journals and funders mandate replication quotas and open repositories. Groups that preregister protocols and deposit raw logs accelerate cumulative knowledge and make it easier for others to work with uncertain results rather than treat them as finished answers.

Formulating Testable Historical Hypotheses from Archival Evidence

Convert a specific archival pattern into a falsifiable proposition: define independent and dependent variables, set temporal and spatial boundaries, and state a numeric threshold that distinguishes signal from noise (e.g., a 5% absolute increase or a kappa ≥ 0.75 for coded categories).

First, produce a one-sentence hypothesis that names the measurement method and the expected direction (example: “If municipal ledgers undercount tax-exempt transactions, then documented exemptions per year will be 10% lower in Archive A than in Archive B, controlling for population”). This forces you to replace narrative with precise measurement.

Operationalize variables in a codebook with examples and decision rules; record edge cases and exceptions, however minor. Use dual coding on a random 10% sample; report raw agreement, Cohen’s kappa, and percent missing. A sensible threshold: kappa < 0.6 triggers re-training, 0.6–0.75 requires revision of the codebook, >0.75 is acceptable for reporting. Be explicit about what is counted as an event and what is excluded.
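The agreement statistics and thresholds above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the function names `cohen_kappa` and `kappa_action` and the toy labels are assumptions introduced here, and the action labels simply restate the thresholds given in the text.

```python
from collections import Counter

def cohen_kappa(coder_a, coder_b):
    """Return (raw_agreement, kappa) for two equal-length lists of labels."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(coder_a)
    # Observed agreement: share of units where both coders chose the same label.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return observed, 1.0  # both coders used one identical label throughout
    return observed, (observed - expected) / (1 - expected)

def kappa_action(kappa):
    """Map kappa to the action bands stated in the text."""
    if kappa < 0.6:
        return "re-train coders"
    if kappa <= 0.75:
        return "revise codebook"
    return "acceptable for reporting"

# Hypothetical double-coded sample of ten ledger entries.
a = ["event", "event", "none", "none", "event", "none", "event", "none", "none", "event"]
b = ["event", "event", "none", "event", "event", "none", "event", "none", "none", "event"]
agreement, kappa = cohen_kappa(a, b)
print(f"raw agreement={agreement:.2f}, kappa={kappa:.2f}, action={kappa_action(kappa)}")
```

Reporting raw agreement next to kappa matters because kappa drops sharply when one category dominates, even if coders rarely disagree.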

Sampling and statistical power: base sample sizes on rates observed in the historical record, not intuition. For proportion tests, the table below gives approximate N per group for 80% power and α=0.05 across common baselines and detectable absolute effects; if effects are tiny you need many more files. Prespecify stopping rules so that you do not stop right after a lucky run; sequential analyses must use alpha correction.

| Baseline rate | Detectable absolute effect | Approx. N per group | Notes |
|---|---|---|---|
| 0.01 | +0.01 (to 0.02) | ~2,300 | rare events demand very large samples |
| 0.10 | +0.05 (to 0.15) | ~680 | common threshold for archival projects |
| 0.20 | +0.10 (to 0.30) | ~290 | moderate effects, feasible in many corpora |
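The table's figures can be approximated with the standard normal-approximation formula for comparing two proportions. The sketch below assumes a two-sided test with equal group sizes and unpooled variance; the function name `n_per_group` is introduced here for illustration and other formulas (pooled variance, continuity correction) would give slightly different numbers.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(p1, p2, alpha=0.05, power=0.80):
    """Approximate N per group to detect p1 vs p2 (two-sided, equal groups)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for target power
    variance = p1 * (1 - p1) + p2 * (1 - p2)       # unpooled binomial variance
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Baseline and target rates taken from the table rows.
for p1, p2 in [(0.01, 0.02), (0.10, 0.15), (0.20, 0.30)]:
    print(f"{p1:.2f} -> {p2:.2f}: about {n_per_group(p1, p2)} per group")
```

The results land close to the rounded values in the table, which suggests the table was built on similar assumptions.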

Control for dating error and selection bias: quantify dating uncertainty as a probability distribution (e.g., ±2 years with p assignments) and run sensitivity checks moving events backward and forward within that distribution. Records are prone to institutional omissions; compare findings against other independent sources and metadata to estimate direction and magnitude of bias.

Address coder psychology explicitly: document the handling of ambiguous entries, record coder confidence scores, and test whether confident-only subsets change results. If results depend on subjective judgments, convert those judgments into ordinal scales and report ordinal models alongside binary ones so readers can gauge the stability of the effect.

Report numbers for every key claim: sample sizes, missing-data percentages, effect sizes, confidence intervals, and robustness checks. Provide at least two falsification tests that could have refuted the finding but did not – that is how you show the hypothesis is not just storytelling. Be ready to state what would be necessary to refute your claim and what additional archives or metadata could shift the conclusion in either direction.

Pre-registration and transparent code release make review easier and replication faster; describe code, extraction queries, and exact filtering steps. A short checklist to include with any paper: hypothesis sentence, variables + codebook, sampling plan with thresholds, inter-coder stats, sensitivity scans, and a list of downsides and plausible alternative explanations. If you have done all that, readers will have more confidence in the conclusions and you will be better positioned to defend them.

How to translate a narrative claim into a falsifiable experimental question

Specify one measurable hypothesis with exact thresholds and a concrete test: e.g., “Among 18–35 y.o. weekly online shoppers, 30 minutes/day of Platform A content increases mean GAD-7 by ≥3 points versus Platform B after 14 days (two-sided t-test, α=0.05, power=0.80, n=70/group).” Mind the effect size and risk of false positives when you give numbers.

- Frame the claim as a single testable sentence: “If X then Y by at least Z within T days.” Use a single dependent variable, the exact instrument (GAD-7, CTR, conversion rate), an effect-size threshold (Z), and a time window (T).

- Operationalize variables precisely: define X (treatment exposure = 30±5 minutes/day, measured via server logs), define Y (mean GAD-7 score), and list inclusion/exclusion criteria for the whole sample. Specify how missing data are handled (e.g., last observation carried forward or multiple imputation).

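- Determine sample size with explicit assumptions: specify baseline mean, SD, minimal detectable difference, α, power. Provide a worked number: with baseline mean=8, SD=4, Δ=3, two-sided α=0.05 and power=0.80, the normal approximation gives roughly 28 per arm, so a planned n≈70 per arm, as in the example above, leaves margin for attrition or a larger-than-assumed SD. State which secondary outcomes exist and correct for multiple comparisons.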

- Pre-register an analysis plan and decision rules: primary outcome, primary test (two-sided t-test/ANOVA/regression), covariates, handling of outliers, stopping rules. Record the exact model formula and the contrast you are testing so the analysis cannot drift after the data arrive.

- Run a pilot (n=20–30) to verify measurements: check that online tracking ran smoothly, that participants fall within the target demographics, and that the instrument responds to changes. If the pilot shows an SD much larger than assumed, revise the sample size.

- Specify falsification criteria and risk trade-offs: declare what pattern would falsify the narrative (e.g., mean difference <1 point with 95% CI excluding Δ≥3). Give the number of negative results required to settle or overturn the claim and list downsides of Type I vs Type II errors for stakeholders.

- Interpret results with pre-specified thresholds: report exact p-values, effect sizes, and 95% CIs; avoid vague language like “the effect is small” or “big change.” If estimates are imprecise, state how many more participants would be needed for a conclusive estimate.

- Example translation: Narrative – “Online feeds increase anxiety.” Falsifiable question – “Does 30 min/day exposure to Feed A vs Feed B increase mean GAD-7 by ≥3 points after 14 days among active users (n=70/arm)?”

- Checklist before launch: instrument validated, baseline balance checked, power calculation saved, randomization code available, monitoring plan to watch for unexpected harms, and a log of what was done and what changed.

- Notes on inference: think about alternative mechanisms, list likely confounders, and report sensitivity analyses exactly (E-value, bias-corrected estimates). A falsified primary hypothesis does not settle every downstream question; some will remain open and are rarely fully resolved.

- Behavioral cautions: prefer simple, exact tests over compound narratives; consider ethical risk, consent language, and the downsides of misclassification.

If you follow the template above, you give reviewers a precise protocol, reduce ambiguity about what is being tested, and make it hard to mistake exploratory signals for confirmed answers – so the claim can be settled or shown mistaken with transparent data.
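The sample-size step in the template can be checked with a short calculation. The sketch below uses the normal approximation for a two-sided, two-sample comparison of means with equal arms; `n_per_arm` is a name introduced here, and an exact t-based calculation would add one or two participants per arm. Under the stated assumptions (SD=4, Δ=3) the approximation gives a figure well below the 70 per arm quoted above, so that number presumably includes extra margin for attrition or a larger SD; that reading is an assumption, not something the text states.

```python
from math import ceil
from statistics import NormalDist

def n_per_arm(sd, delta, alpha=0.05, power=0.80):
    """Approximate participants per arm to detect a mean difference `delta`."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    return ceil(2 * (z * sd / delta) ** 2)

# Assumptions from the template: SD = 4 GAD-7 points, minimal difference = 3.
print("planned SD=4:", n_per_arm(sd=4, delta=3))

# If a pilot suggests a larger SD, the requirement grows with SD squared.
for pilot_sd in (5, 6, 7):
    print(f"pilot SD={pilot_sd}:", n_per_arm(sd=pilot_sd, delta=3))
```

Running the loop before launch shows how sensitive the plan is to the SD assumption, which is the reason the pilot step asks you to revisit the sample size.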

Selecting comparable cohorts and counterfactuals from fragmented records

Require cohorts with ≤20% missingness on pre-specified covariates, temporal precision ≤±30 days, post-adjustment standardized mean differences (SMD) <0.10, a propensity-score caliper of 0.2 SD, minimum N=50 per arm (prefer ≥200 for subgroup checks) and overlap (common support) >0.6; do not relax any of these thresholds without re-running sensitivity analyses.

Inventory fragmentation by kind (truncated records, intermittent reporting, delayed linkage); quantify median inter-record gap, percent censored, and linkage error rate; tag metadata fields on each record so that provenance is searchable by automated filters. Clean matches will likely require probabilistic linkage plus manual review of the top 5% of mismatch scores; flag those records, then drop them if linkage probability is <0.7 or if manual review confirms a false link, as specified in the protocol revision notes.

Selecting on a single metric often leads to overfitting: it is tempting to want one number that settles the choice, but a single-number answer is rarely robust in real data, and putting fit ahead of causal plausibility, however reasonable it seems at the time, produces mistaken matches. Successful selection weighs numerical balance against contextual plausibility, documents trade-offs, and records why each exclusion or match decision was made.

Action checklist: 1) Pre-register covariates, minimum thresholds and missingness cutoffs; 2) Run overlap diagnostics and report SMDs before/after weighting; 3) Set caliper=0.2 SD, replacement allowed only if matched sample remains representative; 4) Require linkage error <5% or sensitivity analysis modeling mislinking; 5) Report date precision (median lag, IQR) and perform time-window sensitivity (±15, ±30, ±60 days); 6) Archive match keys, scoring thresholds, and manual-review notes to enable replication; 7) For final causal claims, run at least three counterfactual specifications and present effect heterogeneity by data-quality strata.
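Item 2 of the checklist (reporting SMDs before and after adjustment) can be sketched as follows. This is a minimal illustration assuming unweighted groups and the common pooled-SD definition of the standardized mean difference; the function names and the toy covariate values are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def standardized_mean_difference(treated, control):
    """SMD using the pooled SD: (mean_t - mean_c) / sqrt((var_t + var_c) / 2)."""
    pooled_sd = sqrt((variance(treated) + variance(control)) / 2)
    if pooled_sd == 0:
        return 0.0  # no variation in either group; treat as balanced
    return (mean(treated) - mean(control)) / pooled_sd

def balance_report(treated_covs, control_covs, threshold=0.10):
    """Return {covariate: (smd, passes)} using the |SMD| < threshold rule."""
    report = {}
    for name in treated_covs:
        smd = standardized_mean_difference(treated_covs[name], control_covs[name])
        report[name] = (round(smd, 3), abs(smd) < threshold)
    return report

# Hypothetical covariates for two small cohorts.
treated = {"age": [30, 32, 34, 36, 38], "household_size": [3, 4, 4, 5, 4]}
control = {"age": [31, 33, 35, 37, 39], "household_size": [3, 4, 4, 5, 4]}
for covariate, (smd, ok) in balance_report(treated, control).items():
    print(f"{covariate}: SMD={smd:+.3f} {'balanced' if ok else 'imbalanced'}")
```

Run the same report on the raw cohorts and again on the matched or weighted sample, and archive both tables with the match keys.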

Operationalizing variables: converting qualitative descriptions into measurable indicators

Define each qualitative concept with a one-sentence anchor and three measurable indicators: (1) a behavioral count (number per 10,000 or per 1,000 words), (2) an ordinal score 0–5 with explicit cutpoints, (3) a contextual ratio (per capita, percent change over last 12 months).

Create a coding rubric containing: label, short definition, inclusion/exclusion rules, examples, edge cases, and a decision rule for ambiguous items. If your sources use terms like “high trust” or “deep commitment,” your rubric must state what is coded as 4 versus 5 (e.g., sentiment >0.6 AND mention frequency >5 per 1,000 words => score 4).

Operational thresholds: ordinal scales use integers 0–5 where 0 = absent, 1 = mention only, 2 = weak evidence, 3 = moderate, 4 = strong, 5 = dominant. For behavioral metrics specify absolute numbers (e.g., clinics per 10,000 population; wait time in days). Target interrater reliability: Cohen’s kappa ≥ 0.70 for nominal items, weighted kappa ≥ 0.75 for ordered scores. Use Spearman’s rho to test rank correlations between coders; aim for rho ≥ 0.60.

Pilot and training protocol: pilot code n = 30–50 units; two coders independently for pilot; scripts for training should include 20 annotated examples per code and a 60–90 minute calibration session. After pilot, calculate percent agreement and kappa, revise definitions, then run a second pilot of 50–100 units. Last reconciliation step: a third senior coder resolves ties and logs reasons.

Construct composite indicators with clear aggregation rules: arithmetic mean for equally weighted ordinal items, or weighted sum if empirical weights from factor analysis exist. Require Cronbach’s alpha ≥ 0.70 for composites; if alpha < 0.60, drop or rework items. For criterion validation, correlate the composite with external measures that predict the same outcome (e.g., an access indicator should predict health service utilization; report Pearson or Spearman correlations and the regression beta with SE).
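The internal-consistency check can be sketched with the standard Cronbach's alpha formula. The code below is illustrative only: `cronbach_alpha` and `composite_decision` are names introduced here, the item scores are invented, and the label for the 0.60–0.70 band is an assumption, since the text gives no rule for that range.

```python
from statistics import variance

def cronbach_alpha(items):
    """items: one list of scores per item, all in the same unit order."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(unit_scores) for unit_scores in zip(*items)]  # composite per unit
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))

def composite_decision(alpha):
    """Apply the thresholds from the text; the middle band is an assumption."""
    if alpha >= 0.70:
        return "keep composite"
    if alpha < 0.60:
        return "drop or rework items"
    return "borderline: review items"  # not specified in the text

# Hypothetical ordinal scores (0-5) for three items across five units.
trust = [3, 4, 2, 5, 4]
commitment = [3, 5, 2, 4, 4]
support = [2, 4, 3, 5, 3]
alpha = cronbach_alpha([trust, commitment, support])
print(f"alpha={alpha:.2f}: {composite_decision(alpha)}")
```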

Automate where feasible: use tokenization and lemmatization scripts to extract counts, sentiment models to assign polarity scores, and regex for structured mentions (dates, numbers). When using automated extraction, report precision, recall, F1 on held-out manual set (target F1 ≥ 0.80 for core labels). Document full pipeline and all parameter values so others can reproduce results.
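Precision, recall and F1 against a held-out manual set can be computed per label as in the sketch below. The function name, the labels and the toy data are assumptions for illustration; in practice the gold labels would come from the manually coded validation set.

```python
def precision_recall_f1(predicted, gold, label):
    """Score one label of an automated extractor against manual (gold) codes."""
    pairs = list(zip(predicted, gold))
    tp = sum(p == label and g == label for p, g in pairs)  # correct hits
    fp = sum(p == label and g != label for p, g in pairs)  # false alarms
    fn = sum(p != label and g == label for p, g in pairs)  # misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical held-out set: did the regex find a date mention in each passage?
gold = ["date", "date", "none", "date", "none", "none"]
predicted = ["date", "none", "none", "date", "date", "none"]
p, r, f1 = precision_recall_f1(predicted, gold, "date")
print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f} meets_target={f1 >= 0.80}")
```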

Missing data rules: if item-level missing <10% per unit, impute with item mean or model-based imputation; if >30%, treat composite as missing. Sensitivity checks: report results with and without imputed values, and with items dropped one at a time. Specify how you handle boundary cases (e.g., phrases that suit multiple codes): assign primary code, annotate secondary code, and preserve both in a multilevel dataset.

Validation examples: convert the claim “community has great support for clinics” into: count of support mentions per 1,000 words = 12 (behavioral), ordinal support score = 4 (strong), percent positive comments = 78% (contextual). Use regression to predict utilization; report coefficient, p-value, and R-squared. If the model fails to predict or coder agreement is disappointing, revisit definitions and re-run the pilot.

Use clear reporting tables: variable name, label, type (ordinal/continuous), unit, cutpoints, coder agreement, number of observations, missing percent, validation correlation. Here are quick cutpoint examples: 0–1 absent/weak, 2–3 moderate, 4–5 strong/dominant; use these across related constructs to keep boundaries consistent across the project.

Practical notes: limit the initial indicator set to 6–12 key measures to avoid diluting the signal; when translating subjective achievements or views into numbers, document the translation rule and preserve original quotes for qualitative follow-up. Consider mixed approaches (manual coding + automated extraction) so you keep nuance while gaining scalability.

Practical sampling strategies when archives are incomplete or biased

Allocate sampling effort using a 60/40 stratified rule: 60% targeted retrieval of under‑represented series, 40% systematic sampling of dense series; set a minimum of 30 records per stratum and recalculate stratum weights after every 100 retrieved items. To estimate total holdings, use capture–recapture: draw two independent samples of sizes n1 and n2, count the m items that appear in both, and compute N_est = (n1 × n2) / m (example: n1=120, n2=80, m=30 → N_est=320).
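The capture–recapture estimate above is the Lincoln–Petersen estimator. The sketch below reproduces the worked example and, as an addition not found in the text, also shows the Chapman variant, which is often preferred when the overlap m is small; the function names are introduced here for illustration.

```python
def lincoln_petersen(n1, n2, m):
    """Estimate total holdings from two samples with m items in common."""
    if m <= 0:
        raise ValueError("no overlap between samples: estimate is undefined")
    return n1 * n2 / m

def chapman(n1, n2, m):
    """Chapman's bias-corrected variant (an addition, not from the text)."""
    return (n1 + 1) * (n2 + 1) / (m + 1) - 1

# Worked example from the text: n1=120, n2=80, m=30.
print("Lincoln-Petersen:", lincoln_petersen(120, 80, 30))
print("Chapman:", round(chapman(120, 80, 30), 1))
```

Both estimators assume the two samples are independent and that every item is equally likely to be drawn, which incomplete catalogs can violate; treat the result as a planning figure.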

Start with a 30‑item pilot to estimate total volume and variance; the pilot variance can change k (the systematic step) by ±25%. Stratify on date, provenance and file type (10‑year bins for 1800–1950, 5‑year bins thereafter) and require at least five independent file identifiers per bin. Flag series with incomplete catalogs – they are the priority for targeted retrieval; attention to catalog gaps reduces systematic bias in the analysis.

Combine systematic drawing with targeted over‑sampling: compute k = floor(total_est / desired_n) for the systematic arm and allocate the targeted arm to cover quota shortfalls. If you do not know total_est, use the pilot to set k and then adjust after each batch of 100. Over‑weighting a single creator or folder will hurt inference more than balanced over‑sampling.
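The two arms can be sketched as below. This is an illustrative sketch under simplifying assumptions: the full list of item identifiers is available (so total_est equals its length), the systematic arm uses a random start, and the targeted arm is reduced to computing per-stratum shortfalls against a minimum quota. The function names and stratum labels are hypothetical.

```python
import random

def systematic_sample(item_ids, desired_n, seed=None):
    """Take every k-th item from a random start, with k = floor(total / desired_n)."""
    k = max(1, len(item_ids) // desired_n)
    start = random.Random(seed).randrange(k)  # random offset within the first step
    return k, item_ids[start::k][:desired_n]

def quota_shortfalls(stratum_counts, minimum=30):
    """Return how many extra items each stratum needs to reach the minimum."""
    return {
        stratum: minimum - count
        for stratum, count in stratum_counts.items()
        if count < minimum
    }

# Hypothetical series of 320 catalogued items, target of 40 systematic draws.
item_ids = [f"item-{i:04d}" for i in range(320)]
k, sample = systematic_sample(item_ids, desired_n=40, seed=1)
print(f"k={k}, drew {len(sample)} items, first three: {sample[:3]}")

# Targeted arm: strata below the 30-record minimum get the remaining effort.
print(quota_shortfalls({"1800-1809": 12, "1810-1819": 45, "1820-1829": 29}))
```

Recording the seed and k in the batch audit lets someone else redraw the same systematic sample.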

Test representativeness quantitatively: run Kolmogorov–Smirnov on date distributions, chi‑square on provenance counts and compute a Gini index for item frequency. Use 1,000 bootstrap replicates to produce 95% CIs for key proportions; if K–S p<0.05 or CI widths exceed ±7 percentage points, add 30–50 items to the deficient strata. Document any midstream rule changes and the exact number of added items.
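The bootstrap step can be sketched with the percentile method. The code below is a minimal illustration: the function names, the fixed seed and the toy sample are assumptions, and the width rule interprets "±7 percentage points" as a half-width of 0.07.

```python
import random

def bootstrap_proportion_ci(flags, replicates=1000, level=0.95, seed=0):
    """Percentile bootstrap CI for the share of 1s in a list of 0/1 flags."""
    rng = random.Random(seed)  # fixed seed so the interval can be reproduced
    n = len(flags)
    estimates = sorted(
        sum(rng.choices(flags, k=n)) / n for _ in range(replicates)
    )
    lower = estimates[int((1 - level) / 2 * replicates)]
    upper = estimates[int((1 + level) / 2 * replicates) - 1]
    return lower, upper

def needs_more_items(lower, upper, max_half_width=0.07):
    """Flag a stratum whose interval is wider than +/- max_half_width."""
    return (upper - lower) / 2 > max_half_width

# Hypothetical stratum: 60 of 200 sampled items come from one provenance.
flags = [1] * 60 + [0] * 140
lower, upper = bootstrap_proportion_ci(flags)
print(f"95% CI: {lower:.3f} to {upper:.3f}; add items: {needs_more_items(lower, upper)}")
```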

Document every operational decision: write a one‑page audit per batch listing inclusion/exclusion rules, file‑level definitions, who made each decision, timestamp, and any protocol changes. Record personal judgment notes separately from coded variables so future analysts can answer why choices were made and replicate adjustments.

Cross‑check with external sources: use contemporary newspapers, trade directories or catalog registries as partner datasets; compute overlap rate (matches per 100 sampled items). If these sources yield fewer than five matches per 100, widen matching tolerances or search additional sources. Track the number of unmatched items and classify mismatch reasons.

Assume heuristics can be mistaken: a good heuristic is a testable one. Validate rules on a withheld 10% validation set and stop using heuristics that reduce coverage by >15%. Do not rely on catalogs alone – sample filenames, marginalia and accession logs; otherwise systematic omissions remain hidden.

Set operational caps: hard cap per series (e.g., 500 items) to avoid domination; if you need more, stratify within the series by decade. If you hit privacy limits or partner restrictions, redact identifiers and record a redaction flag rather than dropping records, so downstream users know what was removed and why.

Recreating Historical Practices: Protocols for Material and Performance Experiments

Run a minimum of three full replicate material runs per protocol with n=5 specimens per run, over at least 2 years, logging temperature at 15-minute intervals and relative humidity at 30-minute intervals; perform a destructive test at week 4, month 6 and at the 24-month mark to capture both early and late failure modes.

For material work document provenance fields: source, batch number, tool geometry (mm), firing or curing schedule (°C and minutes), and microstructure imaging at 200× and 1000×. Prepare three control batches without additives and three with historically sourced additive; this makes direct comparison valid and helps isolate specific degradation modes that would otherwise remain ambiguous. Randomize specimen assignment to reduce operator bias.

When reconstructing performative procedures schedule multiple live reconstructions with fixed durations (15, 45, 120 minutes) and standardized prompts; instrument performers with lightweight accelerometers, contact microphones, and heart-rate monitors; record from three calibrated camera angles and A/B compare recorded sound levels. Maintain a floating roster of two backup performers and assign one colleague as safety lead to accept responsibility for consent and welfare. They should sign role sheets before each session.

Data capture standards: timestamped JSON logs for sensor streams, WAV at 48 kHz for audio, 4K video at 30 fps, and SHA-256 checksums for each file. Archive raw and processed files in two geographically separate repositories; include a changelog that records the last protocol edit, next planned edits, and exact seed numbers for any stochastic simulations. Use automated unit tests for analysis scripts and retain previous specimen fragments when possible.
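The checksum requirement can be met with Python's standard `hashlib` module. The sketch below is one possible shape for a per-file manifest entry; the field names (`file`, `bytes`, `sha256`, `logged_at`) and the function names are assumptions, not a format the text prescribes.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path

def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large video or audio files need little memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def manifest_entry(path):
    """Build one timestamped manifest record for an archived file."""
    path = Path(path)
    return {
        "file": path.name,
        "bytes": path.stat().st_size,
        "sha256": sha256_of_file(path),
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }

def write_manifest(paths, manifest_path):
    """Write manifest entries for all files as a JSON list."""
    entries = [manifest_entry(p) for p in paths]
    Path(manifest_path).write_text(json.dumps(entries, indent=2))
    return entries
```

Store the manifest in both repositories and re-run the hashes after each transfer; a mismatch points to corruption or an undocumented edit.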

Interpretation protocol: do not collapse qualitative testimony into conclusions without cross-checks; listen to participant reports but weigh them against metric trends. If a replication diverges, run targeted diagnostics that probe the causal links and then repeat only the failing step. Settle contradictions with controlled follow-ups rather than narrative synthesis; at the same time, avoid dismissing testimony as mere anecdote.

Metadata requirements: record exact start and stop times, the last preparatory action before a test, environmental logs for the full duration, and a provenance chain that captures any transfer between labs. Use persistent identifiers (DOI) for datasets, and include author contribution statements that list multiple roles. Everything recorded must be retrievable for at least five years and clearly dated.

Risk and limitations: quantify downsides such as sample destruction rate, observer fatigue (measure via repeated reaction-time tests), and instrument drift (schedule calibration every 100 hours). Give credit with citable data packages and acknowledge colleagues who supplied materials or expertise; this is good practice for reproducibility and assigns credit in a way that keeps future cooperation feasible.

Designing reproducible replication protocols for pre-industrial technologies

Specify a single, well-defined protocol file (text + checklist + raw-data template) and commit to run a minimum of 5 independent builds per experimental condition with n=10 physical units each; record 40 mandatory fields per unit (materials, batch number, dimensions, mass, moisture %, ambient temp, fuel type, operator ID).

- Version control and provenance

- Store protocol in plain text and pdf with semantic version (e.g., v1.2.0) and timestamp; preserve original procurement invoices for materials (supplier, lot, year) and link them to each unit.

- Label artifacts with unique IDs and QR codes; scans and photos (RAW) stored alongside protocol. Treat failed runs as data – mark as "fail" and include cause codes (A1..A9).

- Material specification (concrete)

- Clays: report particle size distribution (D10, D50, D90 in µm). Tiny shifts of ±50 µm in D50 change firing shrinkage by ~1.2% (empirical baseline).

- Fibers/textiles: report linear density (tex), twist per meter, humidity at test time (target 12% ±1%).

- Metals: report alloy composition by weight %, slag chemistry, and preheat temperature. For bloomery smelts report charcoal type and porosity (%).

- Geometry and standard test pieces

- Use at least one standard geometry per tech to reduce shape bias. Examples: disk 50 mm Ø × 10 mm thick for ceramics; a 120 mm long, 10 mm Ø bar for wrought iron tensile-like tests; a pretzel-shaped token (30 mm) as a rapid visual standard for surface finish comparisons.

- Record dimensional tolerances to ±0.5 mm and mass to 0.1 g.

- Process control

- Log furnace/oven temperature every 30 seconds with probe accuracy ±1 °C. For kilns state preheat, ramp rates (°C/min or °C/hr, used consistently), soak times, and cooling profile. Example: ramp 60 °C/hr to 800 °C, soak 60 min, cool at 100 °C/hr to 300 °C.

- Fuel load by mass (g) and by calculated energy (kJ). Note operator interventions and precise timestamps; avoid using subjective descriptors like "hot" unless quantified.

- Operators and procedural fidelity

- Record operator ID and prior experience in years; limit each condition to at most two operators to reduce personal technique variance. Rotate operators across conditions to detect operator effects.

- Blind assessment: have at least two independent evaluators score surface quality, function, and appearance on an ordinal 1–5 scale; use Spearman’s rho to compare rank scores against quantitative metrics (density, hardness).

- Data management and templates

- Provide a CSV template with mandatory columns and examples. Backups: keep three copies (local, off-site, cloud) and checksum each nightly.

- Publish metadata: equipment model, calibration date, and sensor serial numbers. Include photos of each step with scale and a tiny ruler for micro-scale reference.

- Statistical plan

- Pre-register primary and secondary outcomes and the thresholds for declaring a replication successful. Use alpha = 0.05 and target power 0.8; for ordinal comparisons, analyze with Spearman’s rho and report rho with a 95% confidence interval.

- Report full distributions and raw counts, not only means. Present failure rates by batch and by operator; a negative trend (failure rate >30%) triggers a protocol review.

- Reproducibility checklist (use on every run)

- Materials match lot numbers: yes/no

- Dimensions within tolerance: yes/no

- Sensor calibration ≤30 days old: yes/no

- All photos uploaded with ID: yes/no

- Operator comments saved: yes/no

- Failure modes and iterative updates

- Log root-cause codes and corrective actions; track patterns across runs (operator, material, environment). More failures than expected in the first 10 runs means stop, troubleshoot, and document changes before continuing.

- Keep a changelog: every revision must be documented with a rationale and the expected effect size. Do not leave informal notes; treat the protocol as a living legal document.

- Communication and accessibility

- Publish protocols with data and machine-readable metadata under an open license; provide a short reproducibility summary for non-specialists that lists exact consumables and equipment models.

- For clarifying questions, provide contact information, including your institutional affiliation and office hours. A personal reply within 14 days is a reasonable expectation.

- Practical examples and numbers

- Ceramic test: n=50 tiles in 5 batches; D50 = 180 µm; firing schedule: 30 °C/hr to 1000 °C, soak 90 min; measured porosity reduction 8.5% (SD 1.1%).

- Textile dye: 6 replicates, fiber moisture 12% ±0.5; dye bath at 60 °C for 45 min; mean lightfastness score 4/5; Spearman’s rho between dye concentration and lightfastness = 0.72 (p=0.008).

- Metallurgical smelt: 5 trials, mean bloom mass 1.3 kg (SD 0.2); charcoal type A gave an 18% higher yield than type B, attributed to its higher calorific value.

If you want to explore reverse comparators, include one modern control run per batch and state why it was chosen; the log should record both negative and positive outcomes. Accept that some procedures are complex and will require protocol iterations: plan for 2–3 revision cycles within the first 12–24 months or the first ~100 runs, whichever comes first.
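The failure-rate rule in the statistical plan (report rates by batch and by operator, with a rate above 30% triggering a protocol review) can be sketched as below. The record layout with `batch`, `operator` and `status` keys, the function names and the sample runs are assumptions for illustration; real logs would come from the CSV template.

```python
from collections import defaultdict

def failure_rates(runs, key):
    """Return {group: share of runs marked 'fail'} grouped by `key`."""
    totals, fails = defaultdict(int), defaultdict(int)
    for run in runs:
        totals[run[key]] += 1
        fails[run[key]] += run["status"] == "fail"  # True counts as 1
    return {group: fails[group] / totals[group] for group in totals}

def groups_needing_review(rates, threshold=0.30):
    """List groups whose failure rate exceeds the review threshold."""
    return sorted(group for group, rate in rates.items() if rate > threshold)

# Hypothetical run log: failed runs are kept as data, not discarded.
runs = [
    {"batch": "B1", "operator": "op-1", "status": "ok"},
    {"batch": "B1", "operator": "op-2", "status": "ok"},
    {"batch": "B1", "operator": "op-1", "status": "fail"},
    {"batch": "B1", "operator": "op-2", "status": "ok"},
    {"batch": "B2", "operator": "op-1", "status": "fail"},
    {"batch": "B2", "operator": "op-2", "status": "fail"},
    {"batch": "B2", "operator": "op-2", "status": "ok"},
]
for key in ("batch", "operator"):
    rates = failure_rates(runs, key)
    print(key, rates, "review:", groups_needing_review(rates))
```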

Экспериментальная история – Методы, тематические исследования и идеи">

Экспериментальная история – Методы, тематические исследования и идеи">

Козависимость в Отношениях – Признаки и Советы по Восстановлению">

Козависимость в Отношениях – Признаки и Советы по Восстановлению">

Я люблю своего парня, но пора ли расставаться? 10 признаков и как принять решение">

Я люблю своего парня, но пора ли расставаться? 10 признаков и как принять решение">

Мы с одним и тем же парнем встречаемся? Тёмная сторона онлайн-групп.">

Мы с одним и тем же парнем встречаемся? Тёмная сторона онлайн-групп.">

Могу ли я быть любимым, если я не люблю себя? Самооценка и отношения">

Могу ли я быть любимым, если я не люблю себя? Самооценка и отношения">

Является ли переписка изменой? Объяснение переписки, приводящей к измене, в Facebook">

Является ли переписка изменой? Объяснение переписки, приводящей к измене, в Facebook">

3 Эффективных Сообщения, Чтобы Написать Женщине Без Био в Приложении для Знакомств">

3 Эффективных Сообщения, Чтобы Написать Женщине Без Био в Приложении для Знакомств">

Почему люди ведут себя плохо в приложениях для знакомств – причины, психология и решения">

Почему люди ведут себя плохо в приложениях для знакомств – причины, психология и решения">

Почему мужчины не задают вопросы – руководство для одинокой женщины">

Почему мужчины не задают вопросы – руководство для одинокой женщины">

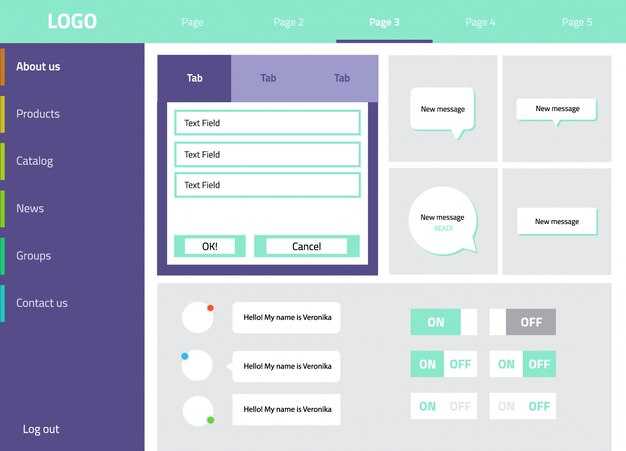

Окно диалога – UI-дизайн, примеры и лучшие практики обеспечения доступности">

Окно диалога – UI-дизайн, примеры и лучшие практики обеспечения доступности">

Преодоление созависимости – практические советы, чтобы освободиться">

Преодоление созависимости – практические советы, чтобы освободиться">